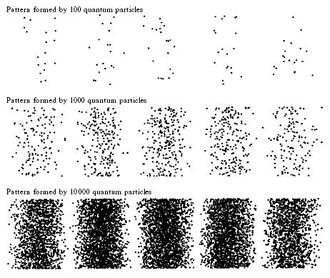

In this piece, let us attempt to understand something that we hear about now and then but tend to place in the complicated frontier of science, with no practical implications for our day-to-day living. This something concerns the details at the minutest levels that define, or have the great potential to define, everything: lives, Nature and the universe, and how they have evolved and continue to evolve in the kaleidoscope of an ever-changing canvas of cause and effect.

What is Quantum Mechanics? Let us have a brief look before moving forward. In simple terms, it is the physics of the motions of the minuscules: of all matter and immatter at their fundamental building blocks of existence, the atomic and subatomic particles (e.g. electrons, protons, neutrons, etc). At this scale, particle motions are governed by the Electromagnetic Force Field (discussed further later). The dual characterization of particles, as either a wave or a quantum, defines their uncertain motion mechanics in this force field. Such uncertain motion mechanics of the fundamentals has implications for the behavior of everything that surrounds us and everything we do, with the mind (immatter) being the matrix of all matter (see MKE Planck’s saying on the perception of matter in The Power of Mind).

The world of Quantum Mechanics (QM) saw the light of day during the late 19th and early 20th centuries with the observations (published in 1901) of German physicist MKE Planck (1858 – 1947; considered the Father of QM). The seed that he sowed, together with the work of Austrian physicist ERJA Schrödinger (1887 – 1961) and German physicist WK Heisenberg (1901 – 1976) on Uncertainty, continues to sprout in several colors, with practical technological applications that are going to revolutionize, among others, the information age in the speed of the way we compute and communicate.
The key to such progress was the groundbreaking Special Theory of Relativity that Albert Einstein (1879 – 1955) proposed in 1905, which, among others, established the space-time definition, mass-energy equivalence, and the universal speed limit. The pioneering works of these great investigators defined the beginning of modern science (20th century onward), which came out of the shell of classical science (17th – 19th century). The classical science period is defined primarily by the ‘Res Extensa’ or matter philosophy of R Descartes (1596 – 1650) and by Isaac Newton’s mathematical elaborations. The technological upheaval afforded by the foundation they created led to rapid industrialization and defined the materialistic Western civilization, with its outreach to managing everything from economics to social development.

The pioneering 20th century modern science based on Relativity, Quantum Mechanics and Uncertainty, in essence, combines the ‘Res Extensa’ with the ‘Res Cogitans’, or the mind, of Descartes’ philosophy. Although the science of this modern era is widely accepted by the scientific community, and its technological incorporation and development have taken or are taking root, the Western social management methods are far from accepting and linking matter with the phenomenon of mind, which primarily defines Eastern philosophy, with its root founded upon the sophistication of Buddhist thought. With this brief outline, let us move forward.

. . .

The key to the development of QM is a technique described and applied by Einstein as the thought experiment, and it has been applied by many investigators. To understand the root of this technique one has to go back in time to the development of Buddhist metaphysics about 2.5 millennia ago.
The technique is similar to what the Buddha (Gautama Buddha, 624 – 544 BCE), The Tathagata, taught as Vipassana meditation (further developed as Chan meditation in China and Zen meditation in Japan; see more in Meditation for True Happiness), which entails meditative focusing on an object to gain insightful understanding of the nature of the causes of its existence. The practice of Vipassana meditation yields fruit only when one has mastered mindful meditation, the technique of controlling and steadfastly grounding the mind on the object.

In addition to such intellectual query, the development of QM has become possible because of the phenomenal advances in technology and instrumentation that have allowed scientists to look deep into objects, from the minutest existence to the remotest corner of Earth and the farthest corner of the universe. But as the QM advances suggest, the world of minuscules works in a strange and mysterious way in the electromagnetic (EM) field, and as we shall see, one such mystery is that the observer (in this case the instrument) influences the observed. Here again a parallelism emerges between Buddhism (Yogachara school, 4th to 7th century CE) and QM, with Buddhism saying that one’s experience of the observed is influenced by the construct of his or her mindset. Perhaps the human mind works in the same length and breadth as the EM field. Similar parallelism can also be found in some common societal beliefs, such as: beauty is in the eyes of the beholder, or that there is no beauty without a beholder. Or simply that everything is dark to the blind, there is no sound to the deaf, no smell to the anosmic, etc.

As one goes through the developmental history of QM, one soon realizes that this vibrant field has gone back and forth, with theoretical physicists presenting conflicting theories, or sometimes supporting one another.
But as experimental physicists reveal new observations, the theoretical physicists rush to re-interpret or re-formulate their theories. In the end, such processes have yielded new theories or ideas on which most agree, thanks to the contributions of many dedicated and committed scientists. Although I am saying this in the context of QM, the same holds in every branch of science and technology: ideas and theories remain fluid, subject to interpretation and re-interpretation, until proven to an acceptable level of certainty (or uncertainty).

My profession as an applied physical scientist and civil engineer within the coastal waters does not come close to the Quantum world. Therefore, this piece is an attempt to delve into the general understanding of the Quantum world in simple terms, by a non-astrophysicist or non-microphysicist. It is based on several sources including: S Hawking (1942 – 2018; A Brief History of Time, Bantam Books, 1995); AC Phillips (Introduction to Quantum Mechanics, Wiley, 2002); R Horodecki and others (Quantum Entanglement, 2007); D Kurzyk (Introduction to Quantum Entanglement, Journal of Theoretical and Applied Informatics, 2012); and several articles published in Wikipedia.

Our world, let us say a civil engineer’s world, is satisfied with the powerful deterministic paradigm of Newtonian (Isaac Newton, 1642 – 1727) physics, or the so-called classical physics. This reality led French scientist PS Laplace (1749 – 1827) to declare that determinism is sound and solid, and is the only method needed to solve any of the world’s problems, including social relations [further reinforced by the convictions of later scientists: American scientist AA Michelson (1852 – 1931; it would consist of adding a few decimal places to results already obtained), and British scientist W Thomson, Lord Kelvin (1824 – 1907; everything was perfect in the landscape of physics except for two dark clouds)].
Despite declarations from such renowned scientists, the frontiers of science did not stop questioning conventional wisdom, while at the same time looking for breakthroughs.

. . .

One such breakthrough was the Heisenberg paradigm of uncertainty. In a civil engineer’s world, many of our modern works are inspired by uncertainty (see the pieces: Uncertainty and Risk, and The World of Numbers and Chances on the Science & Technology page of WIDECANVAS), a tool that is required and helpful for avoiding the risk of failure of engineering projects by evaluating uncertainties of loads, strengths and the environment. The physics close to Einstein’s famous mass-energy equivalence equation {e = m*c^2; e = energy, m = mass, c = speed of light ≈ 671 million miles per hour} is the equivalent of our use of the kinetic energy equation {ke = ½*m*V^2; ke = kinetic energy, m = mass, V = average speed of flow} of Daniel Bernoulli (1700 – 1782). Both equations are based on the acceleration of particles caused by the non-linearity of trajectories in a frictionless motion. I do not know whether Einstein was inspired by Bernoulli, but the two equations are damn similar, though in different contexts: Bernoulli in the application of Newtonian physics to fluid flow, and Einstein in the field of EM governed by Maxwell’s laws (Scottish scientist JC Maxwell, 1831 – 1879). Perhaps this is another indication of the unification and universality of the laws of physics, and for that matter of any law of Nature, no matter where one’s position is in space, whether in the micro or the macro world. The Correspondence Principle (which says that QM theory reduces to classical physics for large quantum numbers), proposed by Danish physicist NHD Bohr (1885 – 1962) in 1920, is one such example. Let us move on to the core content of this piece, before it becomes too lengthy.
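As a brief aside before moving on, the resemblance between the two equations can be made concrete with a standard textbook expansion. It is not taken from the sources listed for this piece; the symbol γ (the Lorentz factor) is introduced here only for illustration, with m the rest mass and V the speed as in the equations above.

```latex
\begin{aligned}
e_{\text{rest}} &= m c^{2} \\
ke_{\text{relativistic}} &= (\gamma - 1)\, m c^{2},
  \qquad \gamma = \frac{1}{\sqrt{1 - V^{2}/c^{2}}} \\
\gamma &\approx 1 + \frac{1}{2}\frac{V^{2}}{c^{2}} + \frac{3}{8}\frac{V^{4}}{c^{4}} + \dots
  \qquad (V \ll c) \\
ke_{\text{relativistic}} &\approx \frac{1}{2}\, m V^{2} + \frac{3}{8}\, m\, \frac{V^{4}}{c^{2}} + \dots
\end{aligned}
```

At everyday speeds the correction terms are negligible, so the relativistic kinetic energy reduces to the familiar ½*m*V^2. That is one sense in which the two expressions are related rather than merely alike.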
We have seen in several pieces of the WIDECANVAS that transfer of energy occurs through the distortion of a medium in a wave form, or simply that energy propagates as a wave. Planck’s observations revealed that electromagnetic radiation, or energy transfer, works as the motion of a photon, a particle-like quantum. This was further confirmed by American physicist AH Compton (1892 – 1962) and French physicist L de Broglie (1892 – 1987) in 1923. Their compelling observations contradict the common understanding that energy is transported in a wave form, describable in frequency, amplitude and phase, and that the wave processes transferring energy occur without any real movement of particles. The two different views of the same phenomenon pose a riddle. Scientists realized that one of the options for solving the riddle was to revisit the two-slit experiment first used by British scientist T Young (1773 – 1829) in 1801. Application of methods similar to this opened the vista to solving the riddle, and the findings have indicated the wave-particle duality of the same phenomenon. In other words, the energy transfer in the EM domain can be viewed as the processes of a wave, but this is no different from the actual movement of particles or quanta. To demonstrate this, I have included an image from Phillips (2002) showing the wave-quanta duality.

. . .

What kind of magnitude are we talking about in the EM field? To put it in perspective, it should be understood that wave lengths and energies in the EM field are exceptionally small, beyond the scale of ordinary comprehension. For example, the visible light frequency range is about 430 – 750 THz (1 THz = 10^12 Hz; 1 Hz = 1 cycle/second), with the wave length ranging between 400 and 700 nm (1 nm = 10^-9 m). The energy of an electromagnetic quantum is commonly given in electronvolt or eV (1 eV = 0.16 x 10^-18 J), and the energy of a photon of visible light is roughly 1.8 to 3.1 eV.
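The orders of magnitude quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration: it uses Planck's relation (energy = Planck's constant x frequency) with standard SI values of the constants, and the function names are made up for this example.

```python
# Physical constants in SI units (exact values under the 2019 SI definitions)
PLANCK_H = 6.62607015e-34        # Planck's constant, J s
LIGHT_SPEED = 299_792_458.0      # speed of light in vacuum, m/s
JOULES_PER_EV = 1.602176634e-19  # 1 eV expressed in joules


def frequency_thz(wavelength_nm: float) -> float:
    """Frequency in THz of light with the given vacuum wavelength in nm."""
    wavelength_m = wavelength_nm * 1e-9
    return LIGHT_SPEED / wavelength_m / 1e12


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon in eV, from E = h * f = h * c / wavelength."""
    frequency_hz = frequency_thz(wavelength_nm) * 1e12
    return PLANCK_H * frequency_hz / JOULES_PER_EV


# Violet end, middle, and red end of the visible range
for nm in (400, 550, 700):
    print(f"{nm} nm: {frequency_thz(nm):.0f} THz, {photon_energy_ev(nm):.2f} eV")
```

Run as written, this gives energies of a few eV per photon across the visible range, that is, a few times 10^-19 J, which is the sense in which the energies involved are far below everyday scales.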
A Joule (J) is the unit of energy in the SI system, and it is the energy transferred when a force of 1 newton (the force required to accelerate 1 kg of mass at the rate of 1 m/s^2) moves an object by 1 m in the direction of the force. These extremely small orders of magnitude indicate something very interesting about the wave-quanta duality. Let us attempt to understand it.

Einstein’s mass-energy equivalence equation and the orders of magnitude immediately indicate that the mass equivalent of a photon or quantum must be extremely small (in modern physics its rest mass is taken to be zero), perhaps so incomprehensibly light that energy propels the photon to behave like a wave, but in an uncertain way. Perhaps the wave-like oscillating behavior is the result of a push-pull effect on the photon during its trajectory, similar to the balancing act of disturbing and restoring forces acting on an elastic system. In such a balancing act it is always possible to have the fluctuating dominance of one over the other at any given time, resulting in uncertainty. Indeed, this is what NHD Bohr theorized as the Complementarity Principle in 1927: that particle and wave behaviors complement each other, indicating the duality of the same phenomenon.

. . .

The uncertainty caused by such processes led Heisenberg to formulate his famous uncertainty principle in 1927. In its exact formulation, it says that the product of the standard deviations of particle position and momentum cannot be smaller than a limit set by Planck’s constant, namely h/(4π) (radiation energy is the product of frequency and a constant, Planck’s constant h = 6.626 x 10^-34 J s). This means that position and momentum cannot both be determined to an arbitrary level of accuracy simultaneously: the more sharply one is known, the less sharply the other can be. Let us attempt to understand it further through the simplified wording of Stephen Hawking: if one repeats measurements of the same system many times, and if they are grouped into A and B, some may fall into group A, while some others fall into B.
Statistical analyses would allow one to predict these groupings as probabilities, but it is impossible to predict whether an individual measurement would veer toward A or B. In other words, the scattering in the images can be predicted in generalized statistical terms that would yield the wave pattern, but the individual positioning is unpredictable. This is indeed what the Born Rule (M Born, 1882 – 1970, German physicist and mathematician) says: that the description given by the wave function is probabilistic. The confirmation of wave-like behavior immediately suggests that the radiation must follow the wave transformation processes of classical physics: refraction, diffraction and superposition. The QM principles were summarized by Bohr and Heisenberg for ordinary comprehension, in what is known as the Copenhagen Interpretation. It upholds: the wave-quanta duality; the influence of, and the interaction with, the laboratory observation on the behavior of minuscules; the probabilistic behavior of the wave function; and the correspondence principle.

. . .

Well, so far so good. But there appeared a problem. Observations have indicated that when a pair of particles or photons is generated together, if one measures the state of one photon (let us say A) as 1, the state of the other photon (say B) is immediately found to be 0. In other words, no matter how far apart the particles are, the response of B is immediate but opposite to the state of A. This raised a serious question, because such simultaneity of states would seem to require an influence traveling faster than the speed of light, thus denying the theory of relativity (which limits the speed of EM radiation to that of light). The inconsistency is addressed in a paper published in 1935 by Einstein, B Podolsky (1896 – 1966) and N Rosen (1909 – 1995), and is known as the EPR paradox. The paradox pointed to the most intriguing and mysterious world of QM, at the cost of denying the completeness of the QM theory.
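The two ideas above, that individual outcomes are unpredictable while their statistics are fixed, and that the paired photons always give opposite results, can be illustrated with a small sampling sketch. It rests on stated assumptions: the pair is taken to be in the anticorrelated state (|01> - |10>)/sqrt(2), both photons are measured in the same basis, and the function name is invented for this example. It is an ordinary random-number simulation of the resulting statistics, not a model of the physics, and it says nothing about how the correlation arises.

```python
import random


def measure_entangled_pair(rng: random.Random) -> tuple[int, int]:
    """Sample one joint result (A, B) for a pair assumed to be in the state
    (|01> - |10>) / sqrt(2), with both photons measured in the same basis."""
    # Amplitudes of the joint outcomes; (0, 0) and (1, 1) have amplitude zero.
    amplitudes = {(0, 1): 2 ** -0.5, (1, 0): -(2 ** -0.5)}
    outcomes = list(amplitudes)
    # Born rule: probability of an outcome = |amplitude| squared.
    probabilities = [abs(a) ** 2 for a in amplitudes.values()]
    return rng.choices(outcomes, weights=probabilities, k=1)[0]


rng = random.Random(2019)  # fixed seed so that repeated runs agree
results = [measure_entangled_pair(rng) for _ in range(10_000)]

share_a_is_1 = sum(a for a, _ in results) / len(results)
always_opposite = all(a != b for a, b in results)

print(f"fraction of runs with A = 1: {share_a_is_1:.3f}")
print(f"B opposite to A in every run: {always_opposite}")
```

Each individual result for A is a coin toss, yet B is opposite to A in every run. Because neither observer can choose which outcome appears, the correlation by itself carries no message from one side to the other.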
Shortly afterwards, Schrödinger addressed the same problem in a paper published in 1935, coining the term Quantum Entanglement (QE) for the phenomenon. But the paradoxical problem did not disappear: how best to explain it? It was the Irish physicist JS Bell (1928 – 1990) who came up with a mathematical formulation in 1964 to say that one of the assumptions behind the paradox must be false, or that the two assumptions, one of reality (that microscopic objects have real properties) and the other of locality (that simultaneous measurements at distant locations cannot influence each other), cannot both be true at the same time. His mathematical argument is known as Bell’s Inequality. The Heisenberg principle, the Born rule and Bell’s inequality all confirm the stochastic or probabilistic nature of QM, which perhaps, by the argument of correspondence, extends to all Natural phenomena.

What is the implication of QE? Well, the implication is huge, ranging from telepathy and teleportation to quantum encryption and computing. Although not yet proven scientifically, the phenomenon of telepathy seems to exist and people experience it sometimes (see the Power of Mind piece on the SOCIAL INTERACTION page). Again not proven scientifically, teleportation is the subject of some science fiction movies such as STAR TREK and STAR WARS. Many religious scriptures talk about gods and goddesses shuttling between heaven and earth instantly. Popular beliefs say that the Buddha used to display that ability, as in the story of his appearing out of nowhere between a rogue son and his mother to save them both (Angulimala was aiming to kill his mother to get her finger as a payment (dakshina) demanded by his guru). But most importantly, very active research and technological development in Qubit computation and encryption are progressing fast.
A simple explanation of this area of work is like this: a qubit is a method of using 0, 1 and combinations of both for fast computing and storage; and entanglement ensures that qubit states are instantly correlated with those of partner qubits somewhere else, at a long distance such as in space. The probabilistic processes of QM, in turn, would allow encryption to ensure the secure flow of information. More in the National Academies Publication NAP 25196.

. . .

A piece on the Quantum World remains incomplete without providing a glimpse of the Quantum Field Theory (QFT) and Hawking Radiation, HR (1974, Stephen Hawking). Before saying more about them, let us attempt to understand what a Force or Energy Field means. A force field refers to a certain dominant parameter within a system which interacts with others, or influences them, or is influenced by them. Perhaps the simplest and most easily understandable example from a Coastal Civil Engineer’s perspective is a Coastal System (see Civil Engineering on our Seashore), where a wave force field interacts with that of a current. The force field theory was first introduced by Michael Faraday (1791 – 1867) in 1845. Apart from the Thermodynamic Force Field (TDFF) and the Bio-electricity Force Field (BEFF), some examples of force fields that are characterized by invisible (but measurable) forces are the Magnetic Force Field, the Electric Force Field, the Electromagnetic Force Field (EMFF) and the Gravitational Force Field, GFF (Isaac Newton and Albert Einstein; see Einstein’s Unruly Hair). Among these, EMFF and GFF are grouped together in the Classical Field (CF) Theory envelope. The QFT term (Quantum Electrodynamics, to be exact) is credited as having been first introduced by PAM Dirac (1902 – 1984) in 1927, with further refinement at later times by other investigators. A Quantum Field (QF) is defined as encompassing the force elements of CF, Special Relativity (see Einstein’s Unruly Hair) and QM (e.g. wave-particle Duality, Uncertainty and Entanglement).
With this knowledge, let us now attempt to understand HR. We have seen in Einstein’s Unruly Hair how Black Holes warp spacetime, creating the Event Horizons around them. Not yet proven, Hawking Radiation, or Black Hole Thermal Radiation, is defined as the radiation in the QF directed outward from Black Holes, from the periphery of the horizon (see also Hawking’s Black Hole Entropy in Entropy and Everything Else). One important implication of HR is that over time (far, far in the future) Black Holes are predicted to lose their masses or energy (immense gravitational pull) through such processes of radiation or evaporation.

. . .

The principles of QM and QE drive us back again to some ancient metaphysics. One of the profound realities describing the law of Paticca Samuppada, or Dependent Origination, taught by the Buddha (The Tathagata) says that there cannot be an independent state of existence. In other words, all states are interdependent, just as the principle of QE says. The lack of independence, together with the law of Impermanence or Anicca, leads to another important conclusion: the reality of Sunyata, or emptiness of substance in any conceivable state of existence. It says that one cannot separate things in space or time to claim that they definitely exist independent of one another. This and other Buddhist metaphysics, including the duality non-duality principle of Nagarjuna (150 – 250 CE), are some of the reasons why QM and Buddhism have become an active area of research. One particular area of focus is the Avatamsaka Sutra (or the Flower Garland Sutra). The sutra directs one to the Four Faces of the Dharmakaya or the Universal Law: the duality of all existence; the mutuality or entanglement of the two; and the complementarity or collaborativeness of all such dual entities. Thus one’s realization is materialized by multidimensional interactions, and is therefore Sunya, or empty of essence by itself.
The sphere of Natural philosophy or science began splitting from philosophy in the 19th century, with further splits occurring across disciplines as the horizon of knowledge continues to expand. In the end they must coalesce, however, not in an amalgamation of disciplines but in yielding something unified, as a theory or law that could explain everything. Different correspondence theories have already been proposed or are emerging in different fields; someday a super-correspondence theory is likely to appear. Ultimately, it is all about energies: how they flow from one state to another, and how they transform things in the process. Some of it is common knowledge already, but there still remain more questions to be answered.

I am tempted to finish this piece by delving into the epistemic definition of knowledge that developed in many early philosophies and religions around the world. Dharmakirti (600 – 660 CE), a Buddhist monk and scholar at Nalanda University (world’s 2nd earliest university; 5th – 12th century CE), while expounding upon the Buddha’s teaching, explained that knowledge is complete only when our queries are satisfied through systematic processes that have three elements: it starts with curiosity and concept (anumana), moves to analytical and intellectual reasoning to understand it (pratyaksa), and must end with valid proofs (pramana) or verifications of the concept. Such processes give a Natural philosopher or scientist the strength to defend his or her findings. The most famous example is the quiet but defiant mumbling of Galileo Galilei (1564 – 1642): and yet it moves. It is popularly believed that this happened while Galileo was being pressured and harassed by the Papal Theocracy to denounce the Copernican (Nicolaus Copernicus, 1473 – 1543) theory that says: Earth is not stationary and is not at the center of the universe.

. . . . .

- by Dr. Dilip K. Barua, 14 March 2019
