. . . A problem can be solved only when we approach it thus. We cannot approach it anew if we are thinking in terms of certain patterns of thought, religious, political or otherwise. So we must be free of all these things, to be simple. That is why it is so important to be aware, to have the capacity to understand the process of our own thinking, to be cognizant of ourselves totally; from that there comes a simplicity . . .

These are lines of wisdom written by Jiddu Krishnamurti (1895 – 1986) in an essay on Simplicity in his book, The First and Last Freedom (HarperCollins 1975). The lines indicate something very important: sticking to, or depending on, an a priori notion or knowledge complicates a problem. It is like the aptly worded Zen Buddhist teaching: empty the mind to see things as they are. Or, in the words of Steve Jobs (1955 – 2011) in his 1998 interview with BusinessWeek: simple can be harder than complex: you have to work hard to get your thinking clean to make it simple. But it’s worth it in the end because once you get there, you can move mountains. Knowledge is important to the extent that it becomes part of one’s intuition or transformative experience; once that is done, its use must not cloud cognitive processes. To address a problem as it is (the process is generally described in Buddhism as seeing things through the Purities of Mind and View), it must be broken down into simple fundamental pieces. Understanding the fundamentals lets things unfold naturally as they are, somewhat like what is generally known as the Reductionist approach. One more reason for doing so is that the observer, the subject (the problem solver), must free himself or herself from affecting the observed, the object (things associated with the problem; see The Quantum World), or from projecting his or her own personal patterns of thought upon the observed. How are all these relevant for a system such as Artificial Intelligence (AI)? 
Can AI reach a level of intelligence high enough to break down its sphere of activities (which, in many cases, represents the science of complex systems incorporating human, Natural and Social processes and issues) into simple terms, so as not to cloud its cognitive processes? Answers to these questions are important for clearly comprehending the potential, limits and consequences of many AI-Powered Products and Services (AIPPS). Before answering, let us examine the premise further from a different but practical angle. An individual using AI-powered appliances (e.g. some smart gadgets) faces different dimensions of reality: some are outright good, others are questionable. These appliances are flooding the market, and we have no option other than getting used to them. A smartphone or a search engine knows an individual’s behaviors and modulates its uses and activities accordingly (with the notion of helping users!). To do that, AI on these devices must monitor user activities to fit them into patterns or define new ones, often with the purpose of profiling users and casting them into known statistics (a priori knowledge), Bayesian or otherwise (see The World of Numbers and Chances). Many of these practices are utterly annoying and even damaging, encroaching upon people’s privacy and more. Although I have mentioned these two devices as examples, the practices and concerns are ubiquitous in many spheres of AIPPS. When one thinks about the growing frustrations that are upon us, one cannot avoid asking:

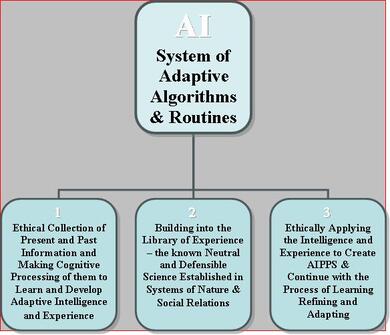

An alarming aspect of it, one that has been and is being fueled by cyberspace activities, is the proliferation of Vigilantism (acts of investigation, enforcement and punishment without legal authority) across the globe. It is not difficult to imagine that self-appointed policing, carrying agendas of ulterior motives, is responsible for destroying many people’s lives and livelihoods with virtual impunity. One should not be surprised if it turns out that impersonation, extra-judicial surveillance, lynching and other heinous activities, in zeal and intensity shaming even medieval and other historical atrocities, are in action, targeting mostly minority communities. One may cry out for the rule of law, democratic or otherwise, but no governing institution or authority appears powerful enough to stop the process, or feels responsible for stopping it; some may even be using it for their own purposes. As an answer to the last question, it is important to realize that AI developers and industries, and for that matter all businesses, prefer to be self-regulated; it is the mantra in any society (the enormous boost to this mantra came in the 1980s, during the tenures of British PM MH Thatcher, 1925 – 2013, and American President RW Reagan, 1911 – 2004; the boost raised the level of social inequity, which has only mushroomed in present times. Further, the funding of research institutes and universities was given a different approach and focus: government funding for neutral research was allowed to drain away as that system was given the boot. Instead, funding from corporations and special interest groups was encouraged, thus introducing a system biased toward the business interests of private entities). But they also want some form of rules and a regulatory framework; otherwise, it is difficult to do business in the chaotic world of an unruly jungle. 
While they want such a framework, industries and businesses are also afraid of over-regulation: regulators overreaching their power and mandate (sometimes manifesting as sources of bureaucratic corruption and hurdles), thus inhibiting the thriving, creativity and innovation of businesses. But well-thought-out guidelines and directions are good for all businesses (as they hinder the growth and survival of unscrupulous businesses). {For clarity of understanding: a regulation represents a set of rules and guidelines that are formulated and exercised by a government oversight agency. They come in the form of codes and by-laws, and all participating entities to whom the regulations apply, or are intended to apply, must abide by them}. There we are. While thinking of some questions, we are forced to ask more questions, in particular on ethics and security grounds. It is reasonable to say that, at this time, the AI world is rather unruly, focused mostly on money-making at the cost of everything else, with no clear and specific regulatory and ethical directions to limit and control some AIPPS. Societal leadership has failed in that respect, being unable or unwilling to understand and deal with the harmful consequences. However, against the backdrop of these crucial aspects, there are also some AIPPS that are outright beneficial, such as a smart faucet closing off the valve if not in use for some time, or a smoke alarm switching on the sprinkler to prevent a fire hazard. People must appreciate the many such available or potentially good AIPPS, but must also be wary of bad ones. Perhaps the power of AI was first demonstrated in the chess matches between a computer and a chess grandmaster. This happened in 1996 and 1997, when the IBM computer Deep Blue defeated the then world chess champion Garry Kasparov (1963 - ), first in a single game in 1996, and then in the whole six-game match in 1997. 
Today, many game apps are on the market in which a ghost AI player can observe the human end-user’s playing strategy, learn from those moves and adapt by developing counter-strategies like a worthy opponent. Let us attempt to understand all these issues in simple terms. It is an attempt by a non-AI professional, but a keen observer, to delve into the basics and application issues of AI from, let’s say, a different perspective. As shown in the attached image, to make things simple, I have attempted to break down the AI system into three fundamental but interconnected phases or subsystems. Some of it represents what people aspire an AI system to be, rather than what it is at present. Missing from these phases are the roles of the human mind. The roles cover such mental faculties as curiosity, imagination, inspiration, creativity and sublime qualities (see The All-embracing Power of Sublimities). These attributes are as much a function of intelligence as of the mind (see The Power of Mind). A human, for all sorts of reasons, may at one time or another rise up, saying, for example: enough is enough, let's forget all these, let me try to clean my thought processes, think plain, straight and simple to get to the bottom of this. Can AI do this like a human? The reality that some of these mental faculties may never be replicated by AI (even if they are replicated, it will be something else, not the mind as we know it) precluded the inclusion of mind phenomena in the image. In the Natural Equilibrium piece I wrote: the human mind is such that no two individuals act or react in the same way to an identical stimulus. Even if the reactions can be cast into statistical patterns, some degree of uncertainty will always characterize them. This piece is laid out by first browsing through some AI basics, then revisiting our common understanding of ethics, morals and laws. 
While elaborating on those, I have also tried to spend some time discussing and exploring some AI-powered applications in the applied physics of civil engineering. This piece was made possible by information gathered from various web-sourced articles and essays written by many authors; it is as much a learning experience as a presentation.

. . .

AI in a Nutshell

Ethics, Morals and Laws

Ethical aspects of the AI system are highlighted twice in the attached image. The prevailing level of ethics, morals and laws defines the standard of the society one lives in (see Social Order), and many of them are not yet an integral part of present-day AI algorithms. But I have included them to stress that they should be, or be required to be, built into AI systems. By not doing so, vendors, users and society at large are being lured into activities that are not always compatible or in sync with ethics, morals and laws. There seems to be a lack of thorough and comprehensive evaluation of the magnitude and extent of risks associated with unethical behaviors and damaging impacts. This is especially relevant for the many social media and internet search engines that already occupy most of our time. Starting in the nineties, internet communication platforms totally revolutionized the way we communicate and do business. But these platforms are also being abused, by both owners and users (in mass media such as TV and radio networks, only the owners or broadcasters can abuse the system). Many of the ethics and regulation questions discussed earlier also apply to internet IP addresses. With this, let us browse through some known definitions of ethics, morals and laws.

AI and Civil Engineering

AI routines and algorithms that can think almost like a civil engineering (CE) professional are in different stages of research and development. AIPPS in CE sectors have the potential to enhance the capabilities of analysis and computation, streamline performance, and help with examining and screening the solutions of a particular problem. A repeat of the cautions pointed out earlier is warranted here: AIPPS are only as good (or as bad) as the resources they utilize; therefore, there has to be some form of CE judgmental check on AIPPS performance. We often hear the acronym GIGO: Garbage In, Garbage Out. Let me attempt to briefly outline some AI applications in the broad arena of civil engineering that are enhancing, and will potentially further enhance, the capabilities of Turning the Wheel of Progress in the forward direction.
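The GIGO caution and the call for CE judgmental checks can be made concrete with a short sketch. The Python below is only an illustration under stated assumptions, not a method taken from any AIPPS vendor or from the sources of this piece: a hypothetical function `judgment_check` first screens the input data for missing or physically implausible values (garbage in), then compares an AI-predicted quantity against an independent estimate made by the engineer (for instance, a hand calculation), flagging the result when the relative difference exceeds a chosen tolerance. The input names, bounds, the 20% tolerance and the example numbers are all hypothetical.

```python
from dataclasses import dataclass
import math


@dataclass
class CheckResult:
    accepted: bool
    reason: str


def judgment_check(inputs, valid_ranges, ai_value, engineer_estimate, tolerance=0.20):
    """Hypothetical CE judgmental check on an AI-predicted value.

    inputs: dict of input name -> numeric value fed to the AI model
    valid_ranges: dict of input name -> (low, high) physically plausible bounds
    ai_value: the quantity predicted by the AI model
    engineer_estimate: an independent estimate by the engineer (assumed available)
    tolerance: allowed relative difference between the two (assumed 20%)
    """
    # Garbage in: reject missing, non-finite or out-of-range inputs.
    for name, (low, high) in valid_ranges.items():
        value = inputs.get(name)
        if value is None or not math.isfinite(value):
            return CheckResult(False, f"input '{name}' is missing or not a number")
        if not low <= value <= high:
            return CheckResult(False, f"input '{name}'={value} outside [{low}, {high}]")

    # Garbage out: compare the AI result with the engineer's own estimate.
    if engineer_estimate == 0:
        return CheckResult(False, "engineer estimate is zero; relative check undefined")
    rel_diff = abs(ai_value - engineer_estimate) / abs(engineer_estimate)
    if rel_diff > tolerance:
        return CheckResult(False, f"AI value differs from estimate by {rel_diff:.0%}")
    return CheckResult(True, f"AI value within {tolerance:.0%} of estimate")


if __name__ == "__main__":
    # Hypothetical inputs and plausibility bounds, for illustration only.
    ranges = {"wave_height_m": (0.0, 30.0), "water_depth_m": (0.0, 11000.0)}
    good = {"wave_height_m": 2.5, "water_depth_m": 12.0}
    bad = {"wave_height_m": -4.0, "water_depth_m": 12.0}
    print(judgment_check(good, ranges, ai_value=105.0, engineer_estimate=100.0))
    print(judgment_check(good, ranges, ai_value=160.0, engineer_estimate=100.0))
    print(judgment_check(bad, ranges, ai_value=105.0, engineer_estimate=100.0))
```

Which bounds and which independent estimate to use is itself a matter of engineering judgment; the sketch only shows where such a check could sit between an AI output and a design decision.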

The other part of the answer can simply be thought of as: humans have a natural affinity for automation (although AI is more than automation), thinking that some of our difficult (even undesirable) activities can be done by someone else. Deplorably, during medieval and colonial times, this gave birth to serfdom and slavery. At the beginning of industrialization, electro-mechanical automated production lines and streamlined distribution and marketing systems appeared. Therefore, it is safe to say that we like comfort and like to enjoy the fruit of hard labor done by someone or something else; AI provides that opportunity. But AI also has an obligation not to do things at the cost of forgoing due diligence on consequences and shunning responsibility. The answer to the second question is perhaps not yes. Well, not yet, although the long-term consequences of dependence on AI may prompt one to say otherwise at some time in the future. In addition, people have raised questions about the possibility of grim future scenarios, e.g. whether or not meaningful human engagement in jobs and other activities could be jeopardized. No one wants to think of such a scary, harmful long-term consequence now, but it is something that may haunt humanity in the future.

. . .

Many of the AI issues discussed here have gained further clarity and explanation in the 2022 NAP Publication #26355, Human-AI Teaming. This publication sheds light on some highly likely limitations and Human-AI teaming interactions that an AI developer must address and pay attention to. Although the document was developed for defense establishments, the discussions and conclusions the authors came up with are insightful and applicable, by and large, to all different areas of AIPPS in different degrees.

Limitations:

(1) Brittleness: AI will only be capable of performing well in situations that are covered by its programming . . .

(2) Perceptual limitations: Though improvements have been made, many AI algorithms continue to struggle with reliable and accurate object recognition in “noisy” environments, as well as with natural language processing . . .

(3) Hidden biases: AI software may incorporate many hidden biases that can result from being created using a limited set of training data, or from biases within that data itself . . .

(4) No model of causation: . . . Because AI cannot use reason to understand cause and effect, it cannot predict future events, simulate the effects of potential actions, reflect on past actions, or learn when to generalize to new situations. Causality has been highlighted as a major research challenge for AI systems . . .

Human-AI Teaming Interaction:

(1) Automation confusion: “Poor operator understanding of system functioning is a common problem with automation, leading to inaccurate expectations of system behavior and inappropriate interactions with the automation” . . .

(2) Irony of automation: When automation is working correctly, people can easily become bored or occupied with other tasks and fail to attend well to automation performance . . .

(3) Poor SA and out-of-the-loop performance degradation: People working with automation can become out-of-the-loop, meaning slower to identify a problem with system performance and slower to understand a detected problem . . . (SA refers to Situation Awareness.)

(4) Human decision biasing: Research has shown that when the recommendations of an automated decision-support system are correct, the automation can improve human performance; however, when an automated system’s recommendations are incorrect, people overseeing the system are more likely to make the same error . . .

(5) Degradation of manual skills: To effectively oversee automation, people need to remain highly skilled at performing tasks manually, including understanding the cues important for decision making. However, these skills can atrophy if they are not used when tasks become automated . . . Further, people who are new to tasks may be unable to form the necessary skill sets if they only oversee automation. This loss of skills will be particularly detrimental if computer systems are compromised by a cyber attack . . . , or if a rapidly changing adversarial situation is encountered for which the automation is not suited . . .

. . .

The attempt to highlight and discuss various aspects of AI facts, concerns and recommendations has made this piece a long one. These aspects are worth paying attention to, especially within the paradigm of dissecting them into simple terms. Most of the ethical concerns discussed are similarly applicable to other seats of power, e.g. corporate empires and government entities (see Governance; Democracy and Larry the Cat). Perhaps some concluding remarks are helpful.

. . . The Koan of this piece: I listen I read I see I learn I talk I write I do I teach I lead I want to learn more I investigate I imagine I create. . . . . . - by Dr. Dilip K. Barua, 22 January 2021
