Science and technology

working with nature- civil and hydraulic engineering to aspects of real world problems in water and at the waterfront - within coastal environments

One must have guessed what I intend to discuss in this piece. People are glued to numbers in one way or another – for the sake of brevity let us say, from the data on finance, social demography and income distribution – to the scientific water level, wave height and wind speed. People say there is strength in numbers. This statement is mostly made to indicate the power of majority. But another way to examine the effectiveness of this statement is like this: suppose Sam is defending himself by arguing very strongly in favor of something. If an observer makes a comment like this, well these are all good, but the numbers say otherwise. This single comment has the power to collapse the entire arguments carefully built by Sam (unless Sam is well-prepared and able to provide counter-punch), despite the fact that numerical generalizations are invariably associated with uncertainties. Uncertainty is simply the lack of surety or absolute confidence in something. . . . While the numbers have such powers, one may want to know:

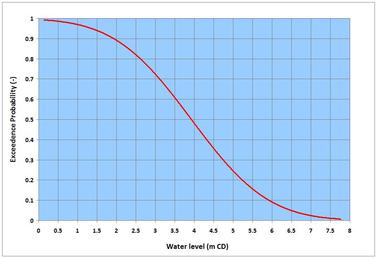

Probability, with its root in logic, is commonly presented as a probability distribution because it shows the distribution of a statistical data set – a listing of all the favorable outcomes, and how frequently they might occur (as a clarification of two commonly confused terms: probability refers to what is likely to happen – it denotes the surety of a happening but unsurety in the scale of its likelihood; while possibility refers to what might happen but is not certain to – it denotes the unsurety of a happening). Both of these methods aim at turning the information conveyed by numbers or data into knowledge – based on which inferences and decisions can be made. Statisticians rely on tools and methods to figure out the patterns and messages conveyed by numbers that may appear chaotic in ordinary views. The notion of "many times" originates from the Law of Large Numbers. Statisticians say that if a coin is tossed only a few times, for instance 10 times – it may yield, let us say, 7 heads (70% outcome) and 3 tails (30% outcome); but if tossed many more times, the outcomes of the two possibilities, head and tail, are likely to approach 50% each – the outcomes one logically expects to see. Following the proof of this observation by Swiss mathematician Jacob Bernoulli (1655 – 1705), the name of the law was formally coined in 1837 by French mathematician Simeon Denis Poisson (1781 – 1840). There is a third aspect of statistics – it is known as Statistical Mechanics (different from ordinary mechanics that deals with one single state) and is mostly used by physicists. Among others, it deals with equilibrium and non-equilibrium processes, and Ergodicity (the hypothesis that the average over a long time of a single state is the same as the average of a statistical ensemble – an ensemble is the collection of various independent states). . . . A few lines on random and systematic processes. They can be discussed from both philosophical and technical angles. 
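The coin-toss illustration above is easy to try numerically. What follows is a minimal sketch and not part of the original discussion: the function name `head_fraction` and the fixed seed are illustrative assumptions, and a pseudo-random generator stands in for a physical coin.

```python
import random


def head_fraction(n_tosses, seed=1):
    """Return the fraction of heads in n_tosses simulated fair-coin tosses."""
    rng = random.Random(seed)  # fixed seed (an assumption) so the run is repeatable
    heads = sum(1 for _ in range(n_tosses) if rng.random() < 0.5)
    return heads / n_tosses


if __name__ == "__main__":
    # Short runs can stray far from 50%; long runs are expected to settle near it.
    for n in (10, 100, 10_000, 100_000):
        print(n, head_fraction(n))
```

With only 10 tosses the fraction of heads can sit well away from one half, while the long runs are expected to stay close to it.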
Randomness, or lack of it, is all about perception – irrespective of what the numbers say, one may perceive certain numbers as random while others may see them differently. In technical terms, let me try to explain through a simple example. Building upon the Turbulence piece on the NATURE page, one can say that the randomness of turbulent flow appears when measurements approach near-instantaneous sampling. Let us say one goes to the same spot again to measure turbulence under similar conditions; it is likely that the measurements would show different numbers. If the measurements are repeated again and again, a systematic pattern would likely emerge that could be traced to different causes – but the randomness and associated uncertainties of individual measurements would not disappear. Something more on randomness. The famous Uncertainty Principle proposed by German theoretical physicist Werner Karl Heisenberg (1901 – 1976) in 1927 changed the way science looks at Nature. It broke the powerful deterministic paradigm of Newtonian (Isaac Newton, 1642 – 1727) physics. The principle says that there can be no certainty in the predictability of a real-world phenomenon. Apart from laying the foundation of Quantum Mechanics, this principle challenges all to have a close look at everything they study, model and predict. Among others, this piece was inspired by reading the books: A Brief History of Time (Bantam Books 1995) by British theoretical physicist Stephen Hawking (1942 - 2018); Struck by Lightning – the Curious World of Probabilities by JS Rosenthal (Harper Collins 2005); the 2016 National Academies Press volume: Attribution of Extreme Weather Events in the Context of Climate Change; and the Probability Theory – the Logic of Science by ET Jaynes (Cambridge University Press 2003). 
A different but relevant aspect of this topic – Uncertainty and Risk was posted earlier on this page indicating how decision making processes depend on shouldering the risks associated with statistical uncertainties. On some earlier pieces on the NATURE and SCIENCE & TECHNOLOGY pages, I have described two basic types of models – the behavioral and the process-based mathematical models – the deterministic tools that help one to analyze and predict diverse fluid dynamics processes. Statistical processes yield the third type of models – the stochastic or probabilistic models – these tools basically invite one to see what the numbers say to understand the processes and predict things on an uncertainty paradigm. While the first two types of models are based on central-fitting to obtain mean relations for certain parameters, the third type looks beyond the central-fitting to indicate the probability of other occurrences. . . . Before moving further, a distinction has to be made. What we have discussed so far is commonly known as the classical or Frequentist Statistics (given that all outcomes are equally likely, it is the number of favorable outcomes of an event divided by the total outcomes). Another approach known as the Bayesian Statistics was proposed by Thomas Bayes (1701 – 1761) – developed further and refined by French mathematician Pierre-Simon Laplace (1749 – 1827). Essentially, this approach is based on the general probability principles of association and conditionality. Bayesian statisticians assume and use a known or expected probability distribution to overcome, for instance, the difficulties associated with the problems of small sampling durations. It is like infusing an intuition (prior information or knowledge) into the science of presently sampled numbers. [If one thinks about it, the system is nothing new – we do it all the time in non-statistical opinions and judgments.] 
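One common way to make the idea of prior information concrete is the Beta-Binomial update for a coin. The sketch below is an illustration under stated assumptions and not a method taken from the text above: the prior strengths (`prior_heads`, `prior_tails`) are hypothetical choices, and the 7-heads-in-10 sample is borrowed from the coin example.

```python
def posterior_mean(heads, tosses, prior_heads=1.0, prior_tails=1.0):
    """Posterior mean of P(head) under a Beta(prior_heads, prior_tails) prior."""
    return (prior_heads + heads) / (prior_heads + prior_tails + tosses)


# Sample frequency alone (the frequentist estimate from 10 tosses).
sample_frequency = 7 / 10

# Flat Beta(1, 1) prior: almost no prior knowledge is infused.
weak_prior = posterior_mean(7, 10)

# Hypothetical strong prior belief in a fair coin, Beta(50, 50).
strong_prior = posterior_mean(7, 10, prior_heads=50.0, prior_tails=50.0)

print(sample_frequency, weak_prior, strong_prior)
```

A stronger prior pulls the estimate from a short sample toward the prior expectation, so the choice of prior deserves to be stated openly.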
While the system can be advantageous and allows great flexibility, it also has room for manipulation in influencing or factoring frequentist statistical information (that comes with confidence qualifiers) in one way or another. . . . Perhaps a little bit of history is desirable. Dating back from ancient times, the concept of statistics existed in all different cultures as a means of administering subjects and armed forces, and for tax collection. The term however appeared in the 18th century Europe as a systematic collection of demographic and economic data for better management of state affairs. It took more than a century for scientists to formally accept the method. The reason for such a long gap is that scientists were somewhat skeptical about the reliability of scattered information conveyed by random numbers. They were more keen on robust and deterministic aspects of repeatability and replicability of experiments and methods that are integral to empirical science. Additionally, scientists were not used to trust numbers that did not accompany the fundamental processes causing them. Therefore, it is often argued that statistics is not an exact science. Without going into the details on such arguments, it can be safely said that many branches of science including physics and mathematics (built upon theories, and systematic uncertainties associated with assumptions and approximations) also do not pass the exactitude (if one still believes this term) of science. In any case as scientists joined, statistical methods received a big boost in sophistication, application and expansion (from simple descriptive statistics to many more advanced aspects that are continually being refined and expanded). Today statistics represents a major discipline in Natural and social sciences; and many decision processes and inferences are unthinkable without the messages conveyed or the knowledge generated by the science of numbers and chances. 
However, statistically generalized numbers do not necessarily tell the whole story, for instance when it comes down to human and social management – because human mind and personality cannot simply be treated by a rigid number. Moreover, unlike the methods scientists and engineers apply, for instance, to assess the consequences and risks of Natural Hazards on vulnerable infrastructure – statistics-based social decisions and policies are often biased toward favoring the mean quantities or majorities at the cost of sacrificing the interests of vulnerable sections of the social fabric. When one reads the report generated by statisticians at the 2013 Statistical Sciences Workshop (Statistics and Science – a Report of London Workshop on the Future of Statistical Sciences) participated by several international statistical societies, one realizes the enormity of this discipline encompassing all branches of Natural and social sciences. Engineering and applied science are greatly enriched by this science of numbers and chances. . . . In many applied science and engineering practices, a different problem occurs – that is how to attribute and estimate the function parameters for fitting a distribution in order to extrapolate the observed frequency (tail ends of the long-term sample frequencies, to be more specific) to predict the probability of an extreme event (which may not have occurred yet). The applied techniques for such fittings to a distribution (ends up being different shapes of exponential asymptotes) of measurements are known as the extremal probability distribution methods. 
They generally fall into a group known as the Generalized Extreme Value (GEV) distribution – and depending on the values of location, scale and shape parameters, they are referred to as Type I (or Gumbel distribution, German mathematician Emil Julius Gumbel, 1891 – 1966), Type II (or Fréchet distribution, French mathematician Maurice René Fréchet, 1878 – 1973; the family as a whole is also known as the Fisher-Tippett distribution after British statisticians Ronald Aylmer Fisher, 1890 – 1962 and Leonard Henry Caleb Tippett, 1902 – 1985) and Type III (or Weibull distribution, Swedish engineer Ernst Hjalmar Waloddi Weibull, 1887 – 1979). This in itself is a lengthy topic – I hope to come back to it at some other time. For now, I have included an image I worked on, showing the probability of exceedance of water levels measured at Prince Rupert in British Columbia. From this image, one can read for example, that a water level of 3.5 m CD (Chart Datum refers to bathymetric vertical datum) will be exceeded for 60% of time (or that water levels will be higher than this value for 60% of time, and lower for 40%). In extreme probability distribution it is common practice to refer to an event in recurrence intervals or return periods. This interval in years says that an event of a certain return period has an annual probability equal to the reciprocal of that period (given that the sampling refers to annual maxima or minima). For example, in a given year, a 100-year event has a 1-in-100 chance (or 1%) of occurring. Another distinction in statistical variables is very important – this is the difference between continuous and discrete random variables. Let me try to briefly clarify it by citing some examples. The continuous random variable is like water level – this parameter changes and has many probabilities or chances of occurring from 0 (exceptionally unlikely) to 1 (virtually certain). In many cases, this type of variable can be described by the Gaussian (German mathematician Carl Friedrich Gauss, 1777 – 1855) or Normal Distribution. 
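The return-period relation described above can be written out in a few lines. This is a minimal sketch: the function names are illustrative, and the second function adds an assumption not stated in the text, namely that successive years are statistically independent, in order to estimate the chance of at least one occurrence over a planning horizon.

```python
def annual_probability(return_period_years):
    """Annual probability of an event: the reciprocal of its return period."""
    return 1.0 / return_period_years


def encounter_probability(return_period_years, horizon_years):
    """Chance of at least one occurrence within horizon_years.

    Assumes each year is independent of the others (an assumption
    introduced for this sketch).
    """
    p = annual_probability(return_period_years)
    return 1.0 - (1.0 - p) ** horizon_years


if __name__ == "__main__":
    print(annual_probability(100))         # 1-in-100 chance in any given year
    print(encounter_probability(100, 50))  # chance over a 50-year horizon
```

Under the independence assumption, a 100-year event is more likely than not to occur at least once in any 100-year span, which is why the return period should not be read as a guaranteed spacing between events.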
The discrete random variable is like episodic earthquake or tsunami events – which are sparse and do not follow the rules of continuity, and can best be described by the Poisson Distribution. . . . When one assembles huge amounts of data, there are a few first steps one can take to understand them. Many of these are described in one way or another in different textbooks – I am tempted to provide a brief highlight here.

. . . Before finishing I like to illustrate a case of conditional probability, applied to specify the joint distribution of wave height and period. These two wave properties are statistically inclusive and dependent; and coastal scientists and engineers usually present them in joint frequency tables. As an example, the joint frequency of the wave data collected by the Halibut Bank Buoy in British Columbia shows that 0.25-0.5 m; 7-8 s waves occur for 0.15% of the time. As for conditional occurrence of these two parameters, analysis would show that the probability of 7-8 s waves is likely 0.52% given the occurrence of 0.25-0.5 m waves; and that of 0.25-0.5 m waves is likely 15.2% given the occurrence of 7-8 s waves. Here is a piece of caution stated by a 19th century British statesman, Benjamin Disraeli (1804 – 1881): There are three kinds of lies: lies, damned lies, and statistics. Apart from bootstrapping, lies are ploys designed to take advantages by deliberately manipulating and distorting facts. The statistics of Natural sciences are less likely to qualify for lies – although they may be marred with uncertainties resulting from human error, data collection techniques and methods (for example, the data collected in the historic past were crude and sparse, therefore more uncertain than those collected in modern times). Data of various disciplines of social sciences, on the other hand are highly fluid in terms of sampling focus, size, duration and methods, in data-weighing, or in the processes of statistical analyses and inferences. Perhaps that is the reason why the statistical assessments of the same socio-political-economic phenomena by two different countries hardly agree, despite the fact that national statistical bodies are supposedly independent of any influence or bias. Perhaps such an impression of statistics was one more compelling reason for statistical societies to lay down professional ethics guidelines (e.g. 
International Statistical Institute; American Statistical Association). . . . . . - by Dr. Dilip K. Barua, 19 January 2018


I like to begin this piece with a line from Socrates (469 – 399 BCE) who said: I am the wisest man alive, for I know one thing, and that is that I know nothing. This is a philosophical statement developed out of deep realization – neither practical nor useful in the mundane hustle-bustle of daily lives and economic processes. Philosophers tend to see the world differently sometimes beyond ordinary comprehension – but something a society looks upon to move forward in the right direction. Scientists and engineers – for that matter any investigator, who explores deep into something, comes across this type of feeling nonetheless – the feeling that there appear more questions than definitive answers. This implies that our scientific knowledge is only perfect to the extent of a workable explanation or solution supported by assumptions and approximations – but in reality suffers from transience embedded with uncertainties. This piece is nothing about it – but an interesting aspect of actions and reactions between waves and structures. . . . One of the keys to understanding these processes – for that matter any dynamic equilibrium of fluid flow – is to envision the principle of the conservation of energy – that the incident wave energy must be balanced by the structural responses – the processes of dissipation, reflection and transmission. These interaction processes cause vortices around the structure scouring the seabed and undermining its stability. Let me share all these aspects in a nutshell. As done in other pieces – I will provide some numbers to give an idea what we are talking about. The materials covered in this piece are based on my experience in different projects; and on the materials described in: the Random Seas and Design of Maritime Structures (Y. 
Goda 2000); the 2002 Technical Report on Wave Run-up and Wave Overtopping at Dikes (The Netherlands); the 2006 USACE Coastal Engineering Manual (EM 1110-2-1100 Part VI); the 2007 Eurotop Wave Overtopping of Sea Defences and Related Structures (EUROCODE Institutes); the 2007 Rock Manual of EUROCODE, CIRIA (Construction Industry Research and Information Association) and CUR (Civil Engineering Research and Codes, the Netherlands); and others. . . . Most of the findings and formulations in wave-structure interactions and scour are empirical – which in this context means that they were derived from experimental and physical scale modeling tests and observations in controlled laboratory conditions – relying on a technique known as the dimensional analysis of variables. Although they capture the first order processes correctly, in real world problems the formulation coefficients may require judgmental interpretations of some sort to reflect the actual field conditions. Some materials relevant for this piece were covered earlier in the NATURE and SCIENCE & TECHNOLOGY pages. This topic can be very elaborate – and to manage it to a reasonable length, I will limit it to discussing some selected aspects of:

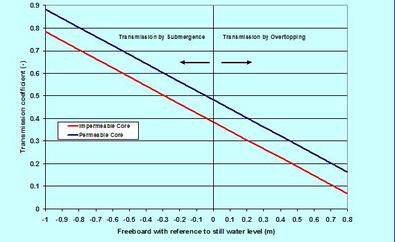

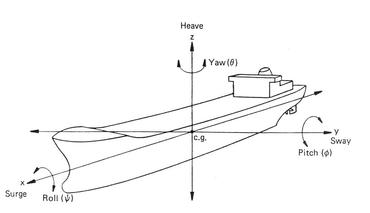

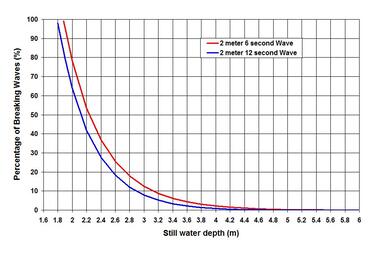

. . . What must one look for to describe the wave-structure interactions? Perhaps the first is to realize that only the waves with lengths (L) less than about 5 times the structures dimension (D) are poised to cause wave-structure interactions (see the Wave Forces on Slender Structures on this page) in the presence of slender structures. The second is that the wave energy must remain in balance – which translates to the fact that sum of squares of the wave heights (H^2) in dissipation, reflection and transmission must add to the incident wave height squared. This balancing is usually presented in terms of coefficients (the ratios of dissipated, reflected and transmitted wave heights to the incident wave height), squares of which (C^2) must add to one. The third is the Surf Similarity Number (SSN, discussed in The Surf Zone on this page) – this parameter appears in every relation where a sloping structure is involved – it is directly proportional to wave period and slope. The fourth is the direction of wave forcing relative to the loading face of the structure – its importance can simply be understood from the differences in interactions between the head-on and oblique waves. Importance of other structural parameters will surface as we move on to discussing the processes. . . . Wave Reflection. Wave reflection can be a real problem for harbors lined with vertical-face seawalls. It can cause unwanted oscillation and disturbance in vessel maneuvering and motion, and in the scouring of protective structure foundations. As one can expect, wave reflection from a vertical-face smooth structure is higher than and different from a non-overtopped sloping structure. A non-breaking head-on wave on a smooth vertical-face structure is likely to reflect back straight into the incident waves – an example of perfect reflection. When waves are incident at an angle on such a structure, the direction of the reflected waves follows the principle of optical geometry. 
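The energy balance and the Surf Similarity Number described above can be sketched as follows. The surf similarity form used here (slope divided by the square root of deep-water wave steepness) is the common Iribarren definition and is assumed to be the one intended; the function names and the sample coefficients are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def dissipation_coefficient(c_reflection, c_transmission):
    """Dissipation coefficient from the balance Cd^2 + Cr^2 + Ct^2 = 1."""
    remainder = 1.0 - c_reflection ** 2 - c_transmission ** 2
    if remainder < 0.0:
        raise ValueError("reflected plus transmitted energy exceeds incident energy")
    return math.sqrt(remainder)


def surf_similarity(wave_height, wave_period, tan_slope):
    """Surf similarity number using the deep-water wave length g*T^2/(2*pi)."""
    deep_water_length = G * wave_period ** 2 / (2.0 * math.pi)
    return tan_slope / math.sqrt(wave_height / deep_water_length)


if __name__ == "__main__":
    # Hypothetical coefficients: 30% of the height reflected, 40% transmitted.
    print(dissipation_coefficient(0.3, 0.4))
    # 1-m wave on a 2 to 1 slope (tan = 0.5) for two wave periods.
    print(surf_similarity(1.0, 6.0, 0.5), surf_similarity(1.0, 15.0, 0.5))
```

In this form the number is linear in both wave period and slope, which is the proportionality noted in the text.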
For sloping structures, the reflection is directly proportional to SSN, and it can be better grasped from a relation proposed by Postma (1989). Let us see it through an example. A 1-m high wave with periods of 6-s and 15-s, propagating head-on, on a non-overtopped 2 to 1 straight sloping stone breakwater (with a smooth surface and an impermeable core) would produce reflected waves in the order of 0.36 m and 0.83 m, respectively. When the slope is very rough, built with quarried rock, most of the incident energy is likely to be absorbed by the structure. It is relevant to point out that according to Goda (2000), natural sandy beaches reflect back some 0.25% to 4% of incident wave energies. . . . Wave Runup. Wave runup is an interesting phenomenon – we see it each time we are on the beach – not to speak of the huge runups that occur during a tsunami overwhelming us in awe and shock. The runup is a way for waves to dissipate their excess energy after breaking. Different relations proposed in literature show its dependence on wave height and period, the angle of wave incidence, beach or structure slope – and on the geometry, porosity and roughness. A careful rearrangement of different proposed equations would indicate that the runup is directly and almost linearly proportional to wave period and slope, but only weakly dependent on wave height. This is the reason why the runup of a swell is higher than that of a lower-period sea – or why a flatter slope is likely to have less runup than a steeper one – or why the tsunami runup is so huge. An estimate following the USACE-EM would show that the maximum runups on a 10 to 1 foreshore beach slope are 1.9 m and 3.8 m for a 1-m high wave with periods of 6-s and 15-s, respectively. The explanation of the runup behavior is that the longer the wave period, the less is its loss of energy during breaking, affording the runup process to carry more residual energy. 
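The reflection and runup examples above can be reproduced with a short calculation. The formula forms are assumptions on my part about which relations the estimates used: a Postma-type reflection relation as commonly quoted in rock structure guidance, with a notional permeability of 0.1 standing in for the impermeable core, and the maximum-runup relation of Mase as given in the USACE manual. They match the quoted numbers to rounding, but the original estimates may have used other variants.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def deep_water_steepness(wave_height, wave_period):
    """Wave height divided by the deep-water wave length g*T^2/(2*pi)."""
    return wave_height / (G * wave_period ** 2 / (2.0 * math.pi))


def reflection_coefficient(wave_height, wave_period, cot_slope, permeability=0.1):
    """Assumed Postma-type form: Cr = 0.071 * P^-0.082 * cot(a)^-0.62 * s^-0.46."""
    s = deep_water_steepness(wave_height, wave_period)
    return (0.071 * permeability ** (-0.082)
            * cot_slope ** (-0.62) * s ** (-0.46))


def max_runup(wave_height, wave_period, tan_slope):
    """Assumed Mase-type form: Rmax = 2.32 * H * xi^0.77."""
    xi = tan_slope / math.sqrt(deep_water_steepness(wave_height, wave_period))
    return 2.32 * wave_height * xi ** 0.77


if __name__ == "__main__":
    for period in (6.0, 15.0):
        reflected = 1.0 * reflection_coefficient(1.0, period, cot_slope=2.0)
        runup = max_runup(1.0, period, tan_slope=0.1)
        print(period, round(reflected, 2), round(runup, 1))
```

Both relations grow with wave period, which is consistent with the longer-period wave producing the larger reflected height and the higher runup.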
Although a runup depends on its parent oscillatory waves for energy, its hydrodynamics is translatory, dominated by the laws of free surface flows – this means in simple terms – the physics of the steady Bernoulli (Daniel Bernoulli; 1700 – 1782) equation. . . . Wave Transmission. How does the wave transmissions over and through a maritime structural obstacle work? Such structural obstacles are called breakwaters because they block or attenuate wave effects in order to protect the areas behind them. There are two basic types – the fixed or rigid, and the floating breakwaters. The former is usually built as a thin-walled sheet pile, a caisson or as a sloped rubble-mound (built by quarried rocks or other manufactured shapes). The second, moored to seabed or fixed to vertical mono-piles, is usually built by floats with or without keels. The floating breakwaters are only effective in a coastal environment of relatively calm short period waves (the threshold maximum ≈ 4 s) – because long period waves tend to transmit easily with negligible loss of energy. The attenuation capacity of such breakwaters is often enhanced by a catamaran type system by joining two floats together. First let us have a glimpse of wave transmissions over a submerged and overtopped fixed breakwater. To illustrate this over a low-crested (structure crest height near the still water level – somewhat higher and lower) rubble-mound breakwater, an image is included showing the transmission coefficients (ratio of transmitted to incident wave height) for a head-on 1-m high 6-s wave, incident on the 2 to 1 slope of a breakwater with a crest width of 1 m. This is based on a relation proposed by researches at Delft (d’Angremond and others 1996) and shows that with other factors remaining constant, the transmission coefficient is linearly but inversely proportional to the freeboard. 
For both the permeable and impermeable cores, the image shows the high transmission by submergence (often termed as green overtopping) and low transmission by overtopping – with the permeable core affording more transmissions than a non-permeable one. Emergent and submergent with the changing tide level, such transmissions are directly proportional to wave height, period and stoss-side breakwater slope, but inversely proportional to the breakwater crest width. Wave transmission concept over a submerged breakwater is used to design artificial reefs to attenuate wave effects on an eroding beach. In another application, the reef layout and configuration are positioned and designed in such a way that wave focusing is stimulated. The focused waves are then led to shoal to a high steepness suitable for surfing – giving the name artificial surfing reef. The transmissions through a floating breakwater are complicated – more for loosely moored than a rigidly moored. As a rule of thumb, an effective floating breakwater requires a width more than half the wave length, or a draft at least half the water depth. To give an idea, an estimate would show that for a 1-m high, 4-s head-on incident wave on a single float with a width of 2 m and a draft of 2 m – rigidly moored at 5 m water-depth – the transmitted wave height immediately behind would be 0.55 m. If the draft is increased to 2.5 m by providing a keel, the transmitted wave heights would reduce to 0.45 m. The estimates are based on Weigel (1960), Muir Wood and Fleming (1981), Kriebel and Bollmann (1996) and Cox and others (1998). . . . Wave Overtopping. Wave overtopping is a serious problem for the waterfront seawalls installed for protecting urban and recreational areas from high storm waves. It disrupts normal activities and traffic flow, damages infrastructure and causes erosion. 
Among the various studies conducted on this topic, the work of Owen (1982) at HR Wallingford offers some insight into the overtopping discharge rates. Overtopping discharge rate is directly proportional to the incident wave height and period, but inversely to the freeboard (the height of the structure crest above still water level). To give an idea: for a 1 m high freeboard, 2 to 1 sloped structure – a 1-m high wave with periods of 6-s and 15-s would have an overtopping discharge of 0.10 and 0.82 m^3/s per meter width of the crest, respectively. If the freeboard is lowered to 0.5 m, the same waves will cause overtopping of 0.26 and 1.25 m^3/s/m. . . . Scour. Scouring of erodible seabed in the vicinity of and around a structure results from the obstruction a structure presents to fluid flows. The obstructed energy finds its way in downward vertical motions – in the nearfield vigorous actions and vortices scooping out sediments from the seabed. Scouring processes are fundamentally different from the erosion processes – the latter is a farfield phenomenon and occurs due to shearing actions. The closest analogies of the two processes are like this: the vortex scouring action is like a wind tornado – while the process of erosion is like the ground-parallel wind picking up and blowing sand. Most coastal scours occur in front of a vertical sea wall, near the toe of a sloping structure, at the head of a breakwater, around a pile, and underneath a seabed pipe. They are usually characterized by the maximum depth of scour (an important parameter indicating the undermining extent of scouring), and the maximum peripheral extent of scouring action. It turns out that these two scouring dimensions scale with wave height, wave period, water depth and structural diameter or width. 
These parameters are lumped into a dimensionless number known as the Keulegan-Carpenter (KC) Number (a ratio of the product of wave period and nearbed wave orbital velocity to the structure dimension), proposed by GH Keulegan and LH Carpenter (1958). This number was introduced in the Wave Forces on Slender Structures piece on this page. Experimental investigations by Sumer and Fredsøe (1998) indicate that a scour hole around a vertical pile develops only when KC > 6. At this value, wave drag starts to influence the structure adding to the inertial force, and scouring action continues to increase exponentially as the KC and structure diameter increase. Scour prevention is mostly implemented by providing stone ripraps – of suitable size, gradation and filter layering. . . . The Koan of this piece: If you do not respect others – how can you expect the same from them. . . . . . - by Dr. Dilip K. Barua, 20 October 2017

This topic represents one of the most interesting problems for port terminal installations – or in a broader sense for station keeping or tethering of floating bodies such as a ship (vessel) or a floating offshore structure. Professionals like Naval Architects and some Maritime Hydraulic Civil Engineers are trained to handle this problem. I had the opportunity to work on some projects that required the static force equilibrium analysis for low frequency horizontal motions, and the dynamic motion analysis accounting for the first order motions in all degrees of freedom. They were accomplished through modeling efforts to configure terminal and mooring layouts, and to estimate restraining forces on mooring lines and fenders for developing their specifications. Let me share some elements of this interesting topic in simple terms. To manage this piece to a reasonable length - some other aspects of ship mooring such as its impacts on structures during berthing are not covered. I hope to get back to this and other aspects at some other time. 
This piece can appear highly technical, so I ask the general readers to bear with me as we go through it. It is primarily based on materials described by American Petroleum Institute (API), Oil Companies International Marine Forum (OCIMF), The World Association for Waterborne Transport Infrastructure (PIANC), British Standard (BS), the pioneering works of JH Vugts (TU Delft 1970) and JN Newman (MIT press 1977), and others. Imagine an un-tethered rigid body floating in water agitated by current, wave and wind. These three environmental parameters will try to impose some motions on the body – the magnitudes of which will depend on the strength and frequency of the forcing parameters – as well as on the inertia of the body resisting the motion and on the strength of restoring forces or stiffness. . . . Before moving into discussing the motions further, a few words on current, wave and wind are necessary. Some of these environmental characteristics were covered in different pieces posted on the NATURE and SCIENCE & TECHNOLOGY pages. Among these three, currents caused by long-waves such as tide, are assumed steady because their time-scales are much longer than the ship motions. Both wave and wind, on the other hand are unsteady – and spectromatic in frequency and direction – causing motions in high (short period) to low (long period) frequency categories. In terms of actions, the ship areas below the water line are exposed to current and wave actions – for wind action it is the areas above the water line. Often the individual environmental forcing on the ship’s beam (normal to the ship’s long axis) proves to dominate the directional loading scenario. But an advanced analysis of the three parameters is required in order to characterize them as the combined actions from the perspectives of operational and tolerance limits – and for design loads acting on different loading faces of the ship. 
The acceptable motion limits vary among ships accounting for shipboard cargo handling equipment and safe working conditions. Some simple briefs on the oscillation dynamics. When a rigid body elastic system is forced to displace from its equilibrium position, it oscillates in an attempt to balance the forces of excitation and restoration. The simplest examples are: the case of vertical displacement when a mass is hung from a spring, and the case of angular displacement of a body fixed to a pivot. When the forcing excitation is stopped after the initial input, an elastic body oscillates freely with exponentially diminishing amplitude. A forced oscillation occurs when the forcing continues to excite the system – in such cases resonance could occur. . . . The natural frequency (or reciprocally the period) of a system is its property and depends on its inertial resistance to motion, and on the strength of the force restoring it to equilibrium. The best way to visualize it is to let the body float freely in undamped oscillations. It turns out that the natural period increases with the size of the body or its displaced water mass. This means that a larger body has a longer natural period of oscillation than a smaller one. Understanding the natural period is very important because if the excitation coincides with the natural period, resonance occurs causing unmanageable amplification of forces. In reality however, resonance rarely occurs because most systems are damped to some extent. Damping reduces the oscillation amplitude of a body by absorbing the imparted energy – partially (under-damped), or more than necessary (over-damped), or just enough to cause critical damping. Most floating systems are under-damped. Force analysis of an over-damped body requires an approach different from the motion analysis. . . . Here are some relevant terms describing a ship. 
A ship is described by its center-line length at the waterline (L), beam or width B (the midship width at the waterline), the draft D (the height between the waterline and ship’s keel), the underkeel clearance (the gap between the ship’s keel and the seabed), the fully loaded Displacement Tonnage (DT) – accounting for displacement of the ship with DWT (Dead Weight Tonnage – the displaced mass of water at the vessel’s carrying capacity – accounting for cargo, fuel, water, crew [including passengers if any] and food item storage), and the Lightweight (empty) Weight Tonnage (LWT). A vessel is known as a ship when its tonnage DWT is 500 or more. The ship dimensions are related to one another in some fashion allowing to making estimates of others if one is known. A term known as the block coefficient (CB) represents the fullness of the ship – it is the ratio of the ship’s actual displaced volume and its prismatic or block volume (with L, B and D). The typical CBs are 0.85 for a tanker and 0.6 for a ferry. To give an idea, the new Panamax vessel (maximum allowed through the new Panama Canal Lock) is L = 366 m; B = 49 m, and D = 15 m. Different classification societies like ABS (American Bureau of Shipping) and LR (Lloyd’s Register of Shipping) set the technical standards for the construction and operation of ships and offshore floating structures. . . . What are some of the basic rigid body motion characteristics? The floating body motions occur in six degrees of freedom representing linear translational and rotational movements. Literature describes the six motion types in different ways; perhaps a description relying on the vessel’s axes is a better way of visualizing them. All the three axes – the horizontal x-axis along the length, the horizontal y-axis across the width, and the vertical z-axis originate at the center of gravity (cg) of the vessel. To illustrate them I have included a generic image (credit: anon) showing the motions referring to:

. . . Another important point needs to be clarified. This is about the stability or equilibrium of the vessel for inclinational motions. A floating body is stable when its centers of gravity (cg) and buoyancy (cb) lie on the same vertical plane. When this configuration is disturbed by environmental exciting forces like wind and wave, or by mechanical processes like imbalanced loading and unloading operations, the vessel becomes unstable, shifting the positions of cg and cb. The imbalanced loading can only be restored to equilibrium by the vessel operators by re-arranging the cargo. Among others, the vessel operators also have the critical responsibility to leave the berth during a storm, to tend the mooring lines – so that all the mooring lines share the imposed loads – and to keep the ship within the berthing limit. For non-inclinational motions like surge, sway, heave and yaw the coincidental positions of the cg and cb are not disturbed. They just translate back and forth in surge, and near and far in sway oscillations. In heave and yaw, the coincidental positions of cg and cb do not translate horizontally – for heave it is the vertical up and down motion, and for yaw it is the angular motion about the vertical axis. . . . How to describe these motions and the corresponding forces in mathematical terms? The description as an equation is conceived in analogy with the equilibrium principle of a spring-mass system – where an oscillating exciting force causes acceleration, velocity and displacement of the floating body. For each of the six degrees of freedom, an equation can be formed, totaling six equations of motion. With all the six degrees of freedom active, the problem becomes very formidable to solve analytically; the only option then is to resort to numerical modeling techniques. 
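The spring-mass analogy can be made concrete for a single degree of freedom. The following is a minimal sketch, assuming a linear oscillator with constant mass, added mass, damping and stiffness; the function names and the box-barge numbers (including the added mass taken equal to the displaced mass and the damping value) are illustrative assumptions, not values from the references cited in this piece.

```python
import math


def natural_period(mass, added_mass, stiffness):
    """Undamped natural period (s) of a single-degree-of-freedom oscillator."""
    return 2.0 * math.pi * math.sqrt((mass + added_mass) / stiffness)


def damping_ratio(mass, added_mass, damping, stiffness):
    """Actual damping over critical damping: < 1 under-damped, > 1 over-damped."""
    critical = 2.0 * math.sqrt(stiffness * (mass + added_mass))
    return damping / critical


def dynamic_amplification(excitation_period, mass, added_mass, damping, stiffness):
    """Steady-state response amplitude relative to the static response F/k."""
    # Frequency ratio: excitation frequency over natural frequency.
    r = natural_period(mass, added_mass, stiffness) / excitation_period
    zeta = damping_ratio(mass, added_mass, damping, stiffness)
    return 1.0 / math.sqrt((1.0 - r ** 2) ** 2 + (2.0 * zeta * r) ** 2)


# Hypothetical box barge in heave: 100 m x 20 m waterplane, 5 m draft.
rho, g = 1025.0, 9.81
mass = rho * 100.0 * 20.0 * 5.0        # displaced water mass, kg
added_mass = mass                      # assumed equal to the mass, for illustration
stiffness = rho * g * 100.0 * 20.0     # hydrostatic heave stiffness, N/m
damping = 4.0e6                        # assumed damping, N.s/m

print("natural heave period (s):", natural_period(mass, added_mass, stiffness))
print("damping ratio:", damping_ratio(mass, added_mass, damping, stiffness))
print("amplification for a 7 s wave:",
      dynamic_amplification(7.0, mass, added_mass, damping, stiffness))
```

The amplification grows sharply as the excitation period approaches the natural period, and it is the damping term alone that keeps it finite there.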
Motion analyses are conducted by two different approaches. The Frequency Domain analysis focuses on motions at different frequencies, adding them together as a linear superposition. The Time Domain analysis, on the other hand, focuses on motions caused by the time-series of the exciting parameters. Perhaps some more clarifications of the terms – the mass including the added mass, the damping and the stiffness – are helpful. The total mass (kg) of a floating body comprises the mass of water displaced by it, and the mass of the surrounding water called the added mass – which is proportional to the size of the body, and also depends on the motion type. These two masses resist the acceleration of the body. The damping (N.s/m; N = Newton, the measure of force; s = second, the measure of time; m = meter, the measure of distance) is a measure of the absorption of the imparted energy by the floating system – having the effect of exponentially reducing its oscillation. Damping is of three basic types: damping by viscous action, by wave drift motion and by mooring system restraints. The stiffness (N/m) is the force per unit displacement required to restore an elastic body to its equilibrium position. The terms discussed above refer to the rectilinear motions of surge, sway and heave. How about the terms for the angular motions of roll, pitch and yaw? The total mass for angular motions becomes the mass moment of inertia, and the stiffness is replaced by the righting moment (moment is the product of force and its distance from the center). In the cases of environmental and passing vessel excitations, gravity and mooring restraints try to restore the stability of the vessel. The roles of these restoring elements are like this:

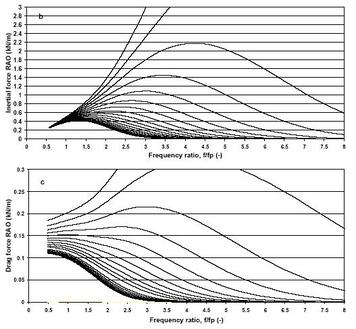

A few words on the passing vessel effect. A speeding vessel passing past a moored vessel causes surge and sway loads and yaw moment on the latter. The magnitudes of them depend on the speed of the passing vessel, the distance between the two, and the underkeel clearance of the moored vessel. As a simple explanation involving the ships in a parallel setting: a moored vessel starts to feel the effect when the passing vessel appears at about twice the length of the former, and the effect (push-pull in changing magnitude and phase) continues until cleared of this passing away distance. Analysis shows that sway pull-out is the highest when the passing vessel is at the midship of the moored vessel – but the surge and the yaw are the lowest at this position. . . . Well so far so good. On some aspects of mooring now. Mooring or station keeping comprises of two basic types – fleet and fixed moorings. The former refers to systems that use primarily the tension members such as ropes and wires, and is mostly applied in designated port offshore anchorage areas. Ports have designated outer single-point anchorage areas where ships can wait for the availability of port berthing, and/or for loading from a feeder vessel and unloading to a lighterage vessel. The area is also used to remain on anchor or on engine-power during a storm. Ships can cast anchors in those areas or moor to an anchorage buoy. For a single point mooring on anchor a large mooring circle is needed to prevent the anchored ship from colliding with the neighbouring vessels. Assuming very negligible drifting of the anchor, the radius of this influence-circle depends on water depth, anchor-chain catenary and ship’s overall length. Anchoring to a moored buoy by a hawser reduces this radius of influence-circle of the moored ship. 
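To give a feel for the influence-circle of a ship swinging on a single anchor, here is a rough geometric sketch. It assumes a non-dragging anchor and replaces the chain catenary by a straight taut line, which overestimates the horizontal reach and is therefore on the conservative side; it also uses the water depth as the vertical drop of the chain. The function name and the numbers are hypothetical, and actual port rules for anchorage spacing may differ.

```python
import math


def swing_circle_radius(ship_length, chain_length, water_depth):
    """Upper-bound swing radius (m): taut-chain horizontal reach plus ship length."""
    if chain_length < water_depth:
        raise ValueError("chain must be at least as long as the water depth")
    # Straight-line reach; a sagging catenary would reach less far horizontally.
    horizontal_reach = math.sqrt(chain_length ** 2 - water_depth ** 2)
    return ship_length + horizontal_reach


# Hypothetical case: 200 m ship, 100 m of chain paid out, 20 m water depth.
print(swing_circle_radius(200.0, 100.0, 20.0))
```

For a ship on a buoy, the same geometry applies with the much shorter hawser length in place of the chain reach, which is why the circle shrinks.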
Buoy facilities are usually placed offshore for mooring of tankers, and such buoys are equipped with multiple cables and hoses to cater to the logistical needs of the vessel as well as for loading and unloading petroleum. A vessel moored at a single point is free to swing or weather-vane following the prevailing weather and current to align itself bow-on. The weather vaning is advantageous because it minimizes the vessel area exposed to wind, wave and current loads. Fixed Mooring refers to a system that uses both tension (ropes and wires) and compression members (energy absorbing fenders). A different type of fixed mooring, mostly implemented in rather calm environmental settings of current and wave is implemented in marinas. The floats of marinas are usually anchored via collars to vertical mono-piles. Only a single-degree of freedom is provided in this system – which means that the floats move rather freely vertically up and down with changing water levels. Typical fixed moorings include tying the ship at piers (port structures extending into the water from the shore), wharves (port structures on the shore), and dolphins (isolated fixed structures in water) together with loading platforms. The latter is mostly placed in deepwater by configuring the alignment such that moored ships will largely be able to avoid beam seas, currents and wind. The tying is implemented by wires and ropes – some are led from the ship winches through fair leads to the tying facilities like bollards, bitts or cleats on the berthing structures. Wires and ropes are specified in terms of diameter, material, type of weaving and the minimum breaking load (MBL). The safe working load (SWL) is usually taken as a fraction of MBL - some 0.5 MBL or lower. The mooring lines are spread out (symmetrically about the midship) at certain horizontal and vertical angles. 
Typically, the spring lines (close to midship, mostly resisting the longitudinal motions) spread out at an angle of no more than 10 degrees from the x-axis; the breasting lines (between spring and bow/stern lines, mostly resisting the lateral motions) spread out at an angle of no more than 15 degrees from the y-axis; and the bow and stern lines are usually laid out at 45 degrees. The maximum vertical line angles are kept within 25 degrees from the horizontal. The key considerations for laying out mooring lines are to keep spring lines as parallel as possible, and breast lines as perpendicular as possible, to the ship’s long axis. When large ships are moored with wires, a synthetic tail is attached to them to provide enough elasticity to accommodate the vessel motions. . . . This piece ended up longer than I anticipated. Let me finish it by quoting Nikola Tesla (1856 – 1943) – the famous inventor, engineer and physicist: If you want to know the secrets of the universe, think in terms of energy, frequency and vibration. . . . . . - by Dr. Dilip K. Barua, 15 September 2017  Most of the materials in this piece are based on my 2008 ISOPE (International Society of Offshore and Polar Engineers) paper: Wave Loads on Piles – Spectral Versus Monochromatic Approach. This paper discusses, for both monochromatic and spectral waves, how the Morison forces (Morison and others, 1950) compare for a surface-piercing round vertical pile in some cases of waves with Ursell Numbers (Fritz Joseph Ursell, 1923 – 2012), U, on the order of 5.0. I have included an image (courtesy ISOPE) from this paper showing inertial and drag force RAOs as a function of frequency. The RAO or Response Amplitude Operator represents the maximum force for a unit wave height. . . . Standing in the nearshore water of unbroken waves, one experiences a shoreward push when a wave crest passes and a seaward pull when a wave trough passes – and if the waves happen to be large, he or she may be dislodged from the foothold. 
The immediate instinct is to recognize the power of a wave in exerting forces on members standing on its way – resisting its motion. How to estimate these forces? Do structures of all different sizes experience the same type of forces? The answer to the first question depends on how well one answers the second question – how the structure sizes up with the wave – the wave length (L) to be exact. It turns out that the nature of wave forces on a structure can be distinguished based on the value of a parameter known as the diffraction parameter – a ratio of the structure dimension (D) perpendicular to the direction of wave advance, and the local wave length, L. When D is less than about 1/5th of L, the structure can be treated as slender and wave forces can be determined by the Morison equation. . . . In this piece let us attempt to see how the Morison forces work – how the forces apply in considerations of both monochromatic and spectral waves. I will also touch upon the nonlinear wave forces. Slender structures exist in many port and offshore installations as vertical structures – as mono pile and pile-supported wharfs in ports – as gravity platforms and jacket structures in offshore structures – and as horizontal structural members and pipelines. What are the Morison forces? They are the forces caused by the wave water particle kinematics – the velocity and acceleration. The two kinematics causing in-line drag and inertial horizontal forces are hyperbolically distributed over the height of a vertical standing structure – decreasing from the surface to the bottom. For a horizontal pipeline, the loads include both the in-line horizontal forces as well as the hydrodynamic vertical lift force. More about these to-and-fro wave forces? The drag force is due to the difference in the velocity heads between the stoss and lee sides of the structure; and the inertial force is due to its resistance to water particle acceleration. 
The hydrodynamic lift force is due to the difference in flow velocities between the top and bottom of a horizontal structure. I will attempt to talk more about it at some other time. Do the slender members change the forcing wave character? Well while the structures provide the resistances by taking the forces upon themselves; they are not able to change the character of the wave – because they are too small to do so. From the perspectives of structural configuration, when a vertical member is anchored to the ground but free at the top, it behaves like a cantilever beam subjected to the hyperbolically distributed oscillating horizontal load. When rigid at both ends, the member acts like a fixed beam. For a horizontal pipeline supported by ballasts or other rigidities at certain intervals, it also acts like a fixed beam with the equally distributed horizontal drag and inertial forces and the vertical hydrodynamic lift forces. . . . Before entering into the complications of spectral and nonlinear waves, let us first attempt to clarify our understanding of how linear wave forces work. We have seen in the Linear Waves piece on the NATURE page that the wave water particle orbital velocity is proportional to the wave height H, but inversely proportional to the wave period T. The water particle acceleration is similarly proportional to H, but inversely proportional to T^2. The nature of proportionality immediately tells us that waves of low steepness (H/L) have lower orbital velocities and accelerations – therefore they are able to cause less forces than the waves of high steepness. For symmetric or linear waves, the orbital velocity and acceleration are out of phase by 90 degrees. In the light of Bernoulli Theorem (Daniel Bernoulli, 1700 – 1782) dealing with the dynamic pressure and velocity head, the drag force is proportional to the velocity squared. 
Both the drag and inertial forces must be multiplied by some coefficients to account for the structural shape and for viscosity of water motion at and around the object. Many investigators devoted their times to find the appropriate values of drag and inertia coefficients. A book authored by T. Sarpkaya and M. Isaacson published in 1981 has summarized many different aspects of these coefficients. Among others the coefficients depend on the value of Reynolds Number (Osborne Reynolds, 1842 – 1912) – a ratio of the product of orbital velocity and structure dimension to the kinematic viscosity. The dependence of the forces on the Reynolds Number suggests that a thin viscous sublayer develops around the structure – and for this reason the Morison forces are also termed as viscous forces. The higher the value of the Reynolds Number, the lower are the values of the coefficients. The highest drag and inertial coefficients are in the range of 1.2 and 2.5, respectively, but drag coefficients as high as 2.0 have been suggested for tsunami forces. . . . How do the drag and inertial forces compare to each other? Two different dimensionless parameters answer the question. The first is known as Keulegan-Carpenter (G.H. Keulegan and L.H. Carpenter, 1958) Number KC; it is directly proportional to the product of wave orbital velocity and period and inversely proportional to the structure dimension. It turns out that when KC > 25 – drag force dominates, and when KC < 5 inertia force dominates. The other factor is known as the Iversen Modulus (H.W. Iversen and R. Balent, 1951) IM, is a ratio of the maximums of inertia and drag forces. It can be shown that both of these two parameters are related to each other in terms of the force coefficients. 
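The pieces described so far can be put together in a short calculation. The sketch below is only an illustration under stated assumptions: linear wave theory for the kinematics, force per unit length of a round vertical pile at one elevation, and assumed coefficient values (Cd = 1.0, Cm = 2.0), water density and viscosity. The function names are mine. It reports the maximum drag and inertia forces, KC, the Reynolds Number, IM taken as the ratio of the two maxima, the slenderness check, and the peak of the total force when the two components are combined 90 degrees out of phase.

```python
import math

G = 9.81        # gravitational acceleration, m/s^2
RHO = 1025.0    # sea water density, kg/m^3 (assumed)
NU = 1.0e-6     # kinematic viscosity of water, m^2/s (assumed)


def wavenumber(period, depth, tol=1e-12):
    """Solve the linear dispersion relation w^2 = g k tanh(k h) for k."""
    omega = 2.0 * math.pi / period
    k = omega ** 2 / G                      # deep-water first guess
    for _ in range(500):
        k_new = omega ** 2 / (G * math.tanh(k * depth))
        if abs(k_new - k) < tol:
            break
        k = 0.5 * (k + k_new)               # averaging keeps the iteration stable
    return k


def morison_maxima(height, period, depth, diameter, z=0.0, cd=1.0, cm=2.0):
    """Maximum Morison forces per unit length (N/m) at elevation z (0 = still water)."""
    k = wavenumber(period, depth)
    # Hyperbolic distribution of the kinematics over the water column.
    decay = math.cosh(k * (z + depth)) / math.sinh(k * depth)
    u_max = math.pi * height / period * decay
    a_max = 2.0 * math.pi ** 2 * height / period ** 2 * decay
    drag = 0.5 * RHO * cd * diameter * u_max ** 2
    inertia = RHO * cm * math.pi * diameter ** 2 / 4.0 * a_max
    # Peak of drag*cos|cos| + inertia*sin over one wave cycle.
    if drag > 0.0 and inertia <= 2.0 * drag:
        peak = drag + inertia ** 2 / (4.0 * drag)
    else:
        peak = inertia
    return {
        "drag_max": drag,
        "inertia_max": inertia,
        "phased_peak": peak,
        "KC": u_max * period / diameter,
        "Re": u_max * diameter / NU,
        "IM": inertia / drag,
        "slender": diameter * k / (2.0 * math.pi) < 0.2,   # D/L below about 1/5
    }


# Illustrative case: 2 m, 12 s wave on a 1 m pile in 20 m of water.
for name, value in morison_maxima(2.0, 12.0, 20.0, 1.0).items():
    print(name, value)
```

Calling the function at several elevations, for example z = 0, -10 and -20, shows how both force components decrease from the surface to the bottom.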
While the horizontal Morison force is meant to result from the phase addition of the drag and inertial forces, which are 90 degrees out of phase, the conventional engineering practice ignores this scientific fact, instead adds the maximums of the two together. This practice adds a hidden factor of safety (HFS) in design forces. For example, a 2-meter, 12-second wave acting on a 1-meter vertical structure standing at 20-meter of depth (U = 6.4) would afford a HFS of 1.45. However HFS varies considerably with the changing values of IM – with the highest occurring at IM = 1.0, but decreases to unity at very high and low values of IM. How does the wave nonlinearity affect the Morison forces? We have seen in the Nonlinear Waves piece on the NATURE page that the phase difference between the velocity and acceleration shifts away from 90 degree – with the increasing crest water particle velocity and acceleration. For the sake of simplicity, let us focus on a 1-meter high 8-second wave, propagating from the region of symmetry at 10 meter water depth (U = 5.0) to the region of asymmetry at 5 meter water depth (U = 22.6). By defining and developing a relationship between velocity and acceleration with U, it can be shown the maximum linear and nonlinear forces are nearly equal to each other at U = 5.0. But as the wave enters into the region of U = 22.6, the nonlinear drag force becomes 36% higher than the linear drag force. The nonlinear inertia force becomes 8% higher than the linear one. With waves becoming more nonlinear in shallower water, the percentage increases manifold higher than the ones estimated by the linear method. While the discussed method provides some insights on the behaviors of nonlinear Morison forces, USACE (US Army Corps of Engineers) CEM (Coastal Engineering Manual) and SPM (Shore Protection Manual) have developed graphical methods to help estimating the nonlinear forces. . . . Now let us turn our attention to the most difficult part of the problem. 
What happens to the Morison forces in spectral waves? How do they compare with the monochromatic forces? To answer the questions, I will depend on my ISOPE paper. The presented images from this paper shows the inertial and drag force RAOs over the 20-meter water depth at 1-meter interval from the surface to the bottom – for a 2-meter high 12-second wave acting on a 1-meter diameter round surface-piercing vertical pile. The forcing spectral wave is characterized by JONSWAP (Joint North Sea Wave Project) spectrum (see Wave Hindcasting). For this case, the RAOs are the highest at frequencies about 3.5 times higher than the peak frequency (fp) of 0.08 Hertz (or 12-second). This is interesting because the finding is contrary to the general intuition that wave forces are high at the frequency of the peak energy – the period effects on wave kinematics! As the frequency decreases, the inertial force RAO diminishes tending to zero. The drag force RAO, on the other hand, tends to reach a constant magnitude as the frequency decreases. This finding confirms that at low frequency motions of tide and tsunami, the dominating force is the drag force (which is also true for cases when KC > 25.0). How do the spectral wave forces compare with the monochromatic wave forces? It turns out that for the case considered, the monochromatic method underestimates the wave forces by about 9%. A low difference of this order of magnitude is good news because one can avoid the rigors of the spectral method to overcome such a small difference – the difference of this magnitude is in the range of typical uncertainties of many parameters. . . . . . - by Dr. Dilip K. Barua, 17 November 2016  We have talked about the Natural waves in four blogs – Ocean Waves, Linear Waves, Nonlinear Waves and Spectral Waves on the NATURE page, and in the Transformation of Waves piece on the SCIENCE & TECHNOLOGY page. 
In this piece let us turn our attention to the most dynamic and perhaps the least understood region of wave processes – the surf zone – the zone where waves dump their energies giving births to something else. What is the surf zone? The Surf zone is the shoreline region from the seaward limit of initial wave breaking to the shoreward limit of still water level. The extent of this zone shifts continuously – to shoreward during high tide and to seaward during low tide – to shoreward during low waves and to seaward during high waves. The wave breaking leading to the transformation – from the near oscillatory wave motion to the near translatory wave bores – is the fundamental process in this zone. Note that by the time a deep-water spectral wave arrives at the seaward limit of the surf zone, its parent spectrum has already evolved to something different, and the individual waves have become mostly asymmetric or nonlinear. In the process of breaking a wave dumps its energy giving birth to several responses – from the reformation of broken waves, wave setup and runup, and infragravity waves – to the longshore, cross-shore and rip currents – to the sediment transports and morphological changes of alluvial shores. The occurrence, non-occurrence or extents of these responses depends on many factors. It is impossible to treat all these processes in this short piece. Therefore I intend to focus on some fundamentals of the surf zone processes - the processes of wave breaking and energy dissipation. . . . How are the surf zone processes treated in mathematical terms? Two different methods are usually applied to treat the problem. The first is based on the approximation of the Navier-Stokes (French engineer Claude-Louis Navier, 1785 – 1836; and British mathematician George Gabriel Stokes, 1819 – 1903) equation. 
In one application, it is based on the assumption that the convective acceleration is balanced by the in-body pressure gradient force, wave forcing and lateral mixing, and by surface wind forcing and bottom frictional dissipation. The second approach is based on balancing two lump terms – the incoming wave energy and the dissipated energy. We have seen in the Linear Waves piece on the NATURE page that wave energy density is proportional to the wave height squared. In a similar fashion, the dissipated wave energy is proportional to the breaking wave height squared multiplied by some coefficients. Both approaches are highly dependent on the empirical descriptions of these coefficients – making the mathematical treatment of the problem rather weak. . . . Let us focus on the energy approach – how the breaking energy dissipation occurs in the surf zone. Many investigators were involved in formulating this phenomenon. For individual monochromatic waves, the two best known are the one proposed by M.J.F. Stive in 1984 and the one proposed by W.R. Dally, R.G. Dean and R.A. Dalrymple in 1985. It was the energy dissipation formulation by J.A. Battjes and J.P.F.M. Janssen in 1978 that addressed the energy dissipation processes of spectral waves. This formulation required an iterative process, and was therefore cumbersome to apply. However, with modern computing power, that hurdle does not exist any more. In addition to the aforementioned investigators, there are many others who modified and refined the formulations and understandings of the surf zone processes. In this piece let us attempt to see how the energy dissipation works for spectral waves. Among the coefficients influencing the energy dissipation is a factor that defines what fraction of the spectral wave group breaks. This factor is very important, and let me try to illustrate how that works – by solving the term iteratively. . . . 
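As a preview of that iteration, here is a minimal sketch of the breaking fraction Qb in the Battjes and Janssen (1978) formulation, which satisfies (1 - Qb) / ln(Qb) = -(Hrms / Hmax)^2, together with their dissipation rate D = (alpha / 4) Qb f rho g Hmax^2. The bisection solver, the function names, alpha = 1, and the shallow-water shortcut Hmax = 0.8 times depth used in the example are my assumptions for illustration.

```python
import math
import sys

RHO = 1025.0   # sea water density, kg/m^3 (assumed)
G = 9.81       # gravitational acceleration, m/s^2


def breaking_fraction(h_rms, h_max):
    """Fraction of breaking waves Qb from (1 - Qb)/ln(Qb) = -(Hrms/Hmax)^2."""
    b2 = (h_rms / h_max) ** 2
    if b2 >= 1.0:
        return 1.0                       # saturated surf zone: every wave breaks

    def residual(q):
        return (1.0 - q) + b2 * math.log(q)

    lo, hi = sys.float_info.min, b2      # the non-trivial root lies below b2
    if residual(lo) >= 0.0:
        return 0.0                       # Qb too small to resolve: no breaking
    for _ in range(200):                 # bisection: residual(lo) < 0 <= residual(hi)
        mid = 0.5 * (lo + hi)
        if residual(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def dissipation_rate(h_rms, h_max, mean_frequency, alpha=1.0):
    """Energy dissipation per unit area (W/m^2) due to breaking."""
    qb = breaking_fraction(h_rms, h_max)
    return 0.25 * alpha * qb * mean_frequency * RHO * G * h_max ** 2


# Illustrative shoaling: Hrms held at 1.4 m while the depth decreases.
for depth in (10.0, 5.0, 3.0, 2.0):
    h_max = 0.8 * depth                  # assumed depth-limited maximum height
    print(depth, breaking_fraction(1.4, h_max))
```

The loop shows the point made in this piece: a small fraction of the waves already breaks in deeper water, and the fraction rises quickly as the depth-limited height approaches the root-mean-square height.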
Before doing that let me highlight some other understandings of the surf zone processes. Early investigators of the surf zone processes noticed some important wave breaking behaviors – that all waves do not break in the same fashion and on all different beach types. Their findings led them to define an important dimensionless parameter – the Surf Similarity Number (SSN) – also known as the Iribarren Number (after the Spanish engineer Ramon Iribarren Cavanilles, 1900 – 1967; C.R. Iribarren and C. Nogales, 1949) – or simply the Wave Breaking Number. This number is the ratio of the beach slope to the square root of the wave steepness – it is therefore directly proportional to the beach slope and inversely proportional to the square root of wave steepness (steepness is the ratio of wave height and wave length – long-period swells are less steep than short-period seas). Either of these two parameters could define a breaker type. To identify the different breaker types it is necessary to define some threshold SSN values. Among the first to define the threshold values were C.J. Galvin (1968) and J.A. Battjes (1974), but more light was shed by many other investigators later. On the lower side, when the SSN is less than 0.5, the type is termed a Spilling Breaker – it typically occurs on gently sloping shores during breaking of high-steepness waves – and is characterized by breaking waves cascading down shoreward creating a foamy water surface. On the upper side, when the SSN is higher than 3.3, the type changes to Surging and Collapsing Breakers. In a surging breaker, waves remain poorly broken while surging up the shore. In a collapsing breaker, the shoreward water surface collapses on the unstable and breaking wave crest. Both of these breakers typically occur on steep shores during periods of incoming low-steepness waves. 
When the SSN ranges between 0.5 and 3.3, the type becomes a Plunging Breaker – it typically occurs on intermediate shore types and wave steepness – and is characterized by the curling of the shoreward wave crest, plunging and splashing on to the wave base. This type of breaker causes high turbulence, and sediment resuspension and transport on alluvial shores. An example of sediment transport processes and associated uncertainties in the surf zone is given in Longshore Sand Transport. . . . Perhaps it is helpful if we think for a while more of what happens in the surf zone – as one watches the incoming waves – high and low – breaking at different depths while propagating on to the shore. What prompts wave breaking? The shallow water wave-to-wave interaction – the Triad – lets the wave spectrum evolve into a narrow band, shifting the peak energy to high frequencies. The concentration of wave energies lets a wave-form become highly nonlinear and unsustainable as the water particle velocity exceeds the shallow-water celerity (square root of the product of acceleration due to gravity and depth), and right before breaking it takes the shape of a solitary wave (a wave that does not have a definable trough). I have tried to throw some light on this breaking process in my short article published in the 2008 ASCE Journal of Waterway, Port, Coastal and Ocean Engineering {Discussion of ‘Maximum Fluid Forces in the Tsunami Runup Zone’}. Further, we have discussed in the Transformation of Waves piece on this page that a wave cannot sustain itself when it reaches the steepness at the threshold value of 1/7 and higher. A criterion proposed by Miche [Le pouvoir reflechissant des ouvrages maritimes exposes a l’action de la houle. 1951] captures the wave breaking thresholds not only due to the limiting steepness, but also due to the limiting water depths in shoaling water. In 1891, J. McCowan showed that a wave breaks when its height reaches about 4/5th of the water depth on a flat bottom. 
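The breaker classification and the breaking thresholds above lend themselves to a short calculation. The sketch below assumes that the SSN is evaluated with the deep-water wave length (one common convention), uses the Miche limit in the form H = 0.142 L tanh(2 pi h / L), where 0.142 is close to the 1/7 steepness limit, and takes the McCowan ratio as 0.78, which the text rounds to 4/5. The function names are illustrative.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def deep_water_wavelength(period):
    """Linear deep-water wave length (m) for a given period (s)."""
    return G * period ** 2 / (2.0 * math.pi)


def surf_similarity(slope, height, period):
    """SSN: beach slope over the square root of deep-water wave steepness."""
    steepness = height / deep_water_wavelength(period)
    return slope / math.sqrt(steepness)


def breaker_type(ssn):
    """Classify the breaker with the 0.5 and 3.3 thresholds quoted in the text."""
    if ssn < 0.5:
        return "spilling"
    if ssn <= 3.3:
        return "plunging"
    return "surging or collapsing"


def miche_limit(wavelength, depth):
    """Limiting wave height (m) from steepness and depth, after Miche (1951)."""
    return 0.142 * wavelength * math.tanh(2.0 * math.pi * depth / wavelength)


def mccowan_limit(depth, ratio=0.78):
    """Depth-limited breaking height (m) on a flat bottom, after McCowan (1891)."""
    return ratio * depth


# Illustrative cases: 2 m waves of 6 s and 12 s on a 1 to 10 slope.
for period in (6.0, 12.0):
    ssn = surf_similarity(0.1, 2.0, period)
    print(period, ssn, breaker_type(ssn))
```

For the two illustrative cases the SSN comes out at roughly 0.5 and 1.1, both within the plunging range, with the longer period wave further from the spilling threshold.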
Now that we know the wave breaking types and initiation, let us try to understand how the energies brought in by the waves are dissipated. To illustrate the process I have included an image showing the percentage of fractional energy dissipation, as two spectral waves – a 2-meter 6-second and a 2-meter 12-second – propagate on to the shore. As demonstrated in the image, spectral waves begin to lose energy long before the final breaking episode happens. This means that the transformation process lets the highest waves of the propagating spectrum break as they reach the breaking threshold. In addition, as expected, the shorter period waves (see the 6-second red line) lose more energy on the way to shallow water than the longer period ones (see the 12-second blue line). By the final breaking, all the energies are dissipated giving birth to other processes (water level change, nearshore currents and sediment transport). On a 1 to 10 nearshore slope, the SSNs for the two cases are 0.5 and 1.1, respectively – indicating a Plunging Breaker type – but at two different scales. On most typical sandy shores, the long-period waves are more likely to end up being a Plunging Breaker than the short-period ones. At the final stage, the breaking wave heights are 76% and 79% of water depths for the two cases. . . . Does anyone see another very important conclusion from this exercise? Well, this conclusion is that, for any given depth and wave height, the long-period waves bring in more energy to the shore than the shorter ones – the period effect. Let us attempt to see more of it at some other time. Some more insights on the surf zone waves are provided by Goda (Yoshimi Goda, 1935 – 2012) describing a graphical method to help determine wave height evolution in the surf zone. 
His graphs show, for different wave steepness and nearshore slopes, how the maximum and the significant wave heights evolve on way from the deep water to the final breaking – and then to the reformed waves after final breaking. . . . . . - by Dr. Dilip K. Barua, 10 November 2016  In this piece let us talk about another of Ocean’s Fury – the Storm Surge. A storm surge is the combined effects of wind setup, wave setup and inverse barometric rise of water level (the phenomenon of reciprocal rise in water level in response to atmospheric pressure drop). Also important in the surge effects is tide because the devastating disasters occur mostly when the peak surge rides on high tide (the superimposition of tide and storm surge is known as storm tide). The wind setups as a minor contributor to water level rise occur in most coastal water bodies during the periods of Strong Breeze (22 – 27 knot; 1 knot = 1.15 miles/h; 1.85 km/h; 0.51 m/s) and Gale Force (28 – 64 knot) winds (see Beaufort Wind Scale) – during winter storms and landward monsoons – and are measurable when the predicted tide is separated from the measured water levels. Such setups and seiche (standing wave-type basin oscillation responding to different forcing and disturbances) are visible in water level records of many British Columbia tide gauges. Storms are accompanied by high wave activities, consequently wave setups are caused by breaking waves. Wave setup is the super elevation of the mean water level – this elevation rises from the low set-down at the wave breaker line. Let us attempt to understand all these different aspects of a storm surge – but focusing only on Hurricane (wind speed > 64 knot) scale storms. . . . I have touched upon the phenomenon of storm surge in the Ocean Waves piece on the NATURE page telling about my encounter with the 1985 cyclonic storm surge on Bangladesh coast. 
Later my responsibilities led me to study and model some storm surges – surges caused by Hurricane ISABEL (CAT-2, September 18, 2003), Hurricanes FRANCES (CAT-2, September 5, 2004) and JEANNE (CAT-3, September 26, 2004), and Hurricane IKE (CAT-2, September 12, 2008) on the U.S. coasts. Some materials of my U.S. experiences are presented and published (Littoral Shoreline Change in the Presence of Hardbottom – Approaches, Constraints and Integrated Modeling, FSBPA 2009; Sand Placement Design on a Sand Starved Clay Shore at Sargent Beach, Texas, ASBPA 2010 [presented by one of my young colleagues at Coastal Tech]; and Integrated Modeling and Sedimentation Management: the Case of Salt Ponds Inlet and Harbor in Virginia, Proceedings of the Ports 2013 Conference, ASCE). . . . In response to managing the storm effects, many storm-prone coastal countries have customized modeling and study tools to forecast and assess storm hazard aftermaths. Examples are the FEMA numerical modeling tool SLOSH (Sea, Lake and Overland Surges from Hurricanes) and the GIS based hazard effects analysis tool HAZUS (Hazards U.S.). SLOSH is a coupled atmospheric-hydrodynamic model developed by the National Hurricane Center (NHC) at NOAA. The model does not include storm waves and wave effects, or rain flooding. NHC manages a Hurricane database HURDAT to facilitate studies by individuals and organizations. WMO-1076 is an excellent guide on storm surge forecasting. . . . What are the characteristics of such a Natural hazard – of the storm surge generating Hurricanes? The Hurricanes (in the Americas), Cyclones (in South, Southeast Asia and Australia) or Typhoons (in East Asia) are tropical low pressure systems fed by spiraling winds and clouds converging toward the low pressure. Perhaps an outline of some of the key characteristics will suffice for this piece.